Amid the ubiquity of large language models (LLMs) and computer vision models, the artificial intelligence/machine learning (AI/ML) research community has coalesced around the concept of foundation models. These models learn from large-scale datasets, make predictions based on patterns in training data, and generalize predictions to new domains. Foundation models are scalable and effective across a range of tasks.

Perhaps because of this appeal, the phrase has begun appearing in classical computational science research. However, a Livermore-led team asserts that certain features driving the success of AI/ML foundation models are missing from computational science counterparts. Definitions matter for scientific rigor and consistency, and confusion grows without a shared understanding of what “foundation model” means in a computational science context.

Researchers at LLNL’s Center for Applied Scientific Computing (CASC) kick off this conversation in a new position paper, “Defining Foundation Models for Computational Science: A Call for Clarity and Rigor,” available as a preprint. Youngsoo Choi, Siu Wun Cheung, Seung Whan Chung, Dylan Copeland, Coleman Kendrick, and William Anderson are joined by collaborators from Sandia National Laboratories, the Korea Institute of Science and Technology, Virginia Tech, University of Illinois Urbana-Champaign, and Rice University.

The team’s paper describes the characteristics of AI/ML foundation models, proposes an appropriate definition for computational science, and presents a framework—the Data-Driven Finite Element Method (DD-FEM)—that fulfills the definition in practice. Cheung explains, “The rise of AI/ML has led to interdisciplinary scientific research, and the lack of an agreed-on definition of ‘foundation model’ hinders communication between different fields. Our paper suggests one way to overcome this, but more importantly, we call for more thought about the properties of foundation models and designing them in this new landscape.”

Model of a Model

A robust foundation model in computational science must meet a high bar. Chung states, “The concept should be specific with defined characteristics so we can all make progress with the same standard.” The model should train on data from a broad range of applications and physical systems, perform well on problems it hasn’t solved before, scale as data volume increases, and adapt to new tasks with minimal modification.

The challenges of developing the ideal foundation model are considerable. An LLM’s data point (or token) is small compared to the data generated by a 3D multiphysics simulation, which has extensive processing and memory requirements. Although AI/ML models can draw from large public datasets, standardized datasets spanning heterogeneous physics domains are rare, and when they are available, models trained on them are difficult to fine-tune for downstream tasks, which frustrates efforts toward model scalability and reuse.

Nevertheless, the team says the goal is reachable. “AI/ML models improve because they collect as much contextual data as possible,” notes lead author Choi. “We can apply the same principle to computational science models.”

Building a New Foundation

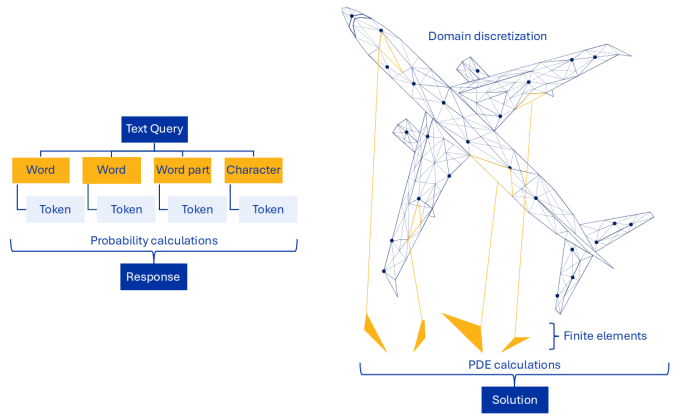

To introduce the DD-FEM framework, the team draws an analogy between foundation models in AI/ML and foundational methods in computational science. Mainstream LLMs parse the user’s text query into tokens, each numerically represented in high-dimensional space. Algorithms iteratively calculate the probability of each subsequent token, building a response one token at a time.
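The token-by-token loop can be illustrated with a toy sketch. Everything here is hypothetical: the five-word vocabulary and the `LOGITS` score table are invented for illustration, and the next token depends only on the previous one, whereas a real LLM conditions on the entire preceding context with a learned neural network.

```python
import numpy as np

# Hypothetical toy vocabulary (not from any real model).
VOCAB = ["<start>", "finite", "element", "method", "<end>"]

# Hypothetical scores: LOGITS[i, j] is the score of token j following token i.
LOGITS = np.array([
    [0.0, 3.0, 1.0, 0.0, 0.0],  # after <start>
    [0.0, 0.0, 3.0, 1.0, 0.0],  # after "finite"
    [0.0, 0.0, 0.0, 3.0, 1.0],  # after "element"
    [0.0, 1.0, 0.0, 0.0, 3.0],  # after "method"
    [0.0, 0.0, 0.0, 0.0, 0.0],  # after <end> (never used)
])


def softmax(scores):
    """Convert raw scores into a probability distribution."""
    shifted = scores - np.max(scores)  # subtract the max for numerical stability
    exp = np.exp(shifted)
    return exp / exp.sum()


def generate(max_tokens=10):
    """Build a response one token at a time by always taking the likeliest next token."""
    tokens = [0]  # begin with <start>
    while len(tokens) < max_tokens:
        probs = softmax(LOGITS[tokens[-1]])
        next_token = int(np.argmax(probs))  # greedy choice
        tokens.append(next_token)
        if VOCAB[next_token] == "<end>":
            break
    return [VOCAB[t] for t in tokens]
```

Calling `generate()` walks the table row by row until it reaches `<end>`. Production systems typically sample from the probabilities rather than always taking the maximum.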

Similarly, Choi points out, “Solving a global physics domain directly is difficult, which is why computational science relies on techniques like the finite element method [FEM].” FEM algorithms first decompose the problem domain into discrete elements, solve the partial differential equations for each element using basis functions, and finally assemble these local solutions to form the complete, global solution. In other words, both LLM and FEM processes work from the top down, then from the bottom up. (See Figure 1.) Additionally, both paradigms are reusable and widely applicable across problems.
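The decompose, compute locally, and assemble pattern can be seen in a minimal sketch. This is a textbook 1D example, not the team's code: it assumes the model problem -u'' = f on (0, 1) with u(0) = u(1) = 0, a uniform mesh, and linear "hat" basis functions, and the function name `solve_poisson_1d` is made up for illustration.

```python
import numpy as np


def solve_poisson_1d(n_elements, f=1.0):
    """Solve -u'' = f on (0, 1) with u(0) = u(1) = 0 using linear finite elements."""
    # 1. Decompose the domain into n_elements equal intervals.
    nodes = np.linspace(0.0, 1.0, n_elements + 1)
    h = nodes[1] - nodes[0]

    # 2. Local contributions: stiffness matrix and load vector of one element,
    #    computed from the two linear basis functions that live on it.
    k_local = (1.0 / h) * np.array([[1.0, -1.0], [-1.0, 1.0]])
    f_local = (f * h / 2.0) * np.array([1.0, 1.0])

    # 3. Assemble the local pieces into the global system K u = F.
    K = np.zeros((n_elements + 1, n_elements + 1))
    F = np.zeros(n_elements + 1)
    for e in range(n_elements):
        idx = [e, e + 1]  # global node numbers of element e
        K[np.ix_(idx, idx)] += k_local
        F[idx] += f_local

    # 4. Apply the boundary conditions by solving only for interior nodes.
    interior = np.arange(1, n_elements)
    u = np.zeros(n_elements + 1)
    u[interior] = np.linalg.solve(K[np.ix_(interior, interior)], F[interior])
    return nodes, u
```

For constant f = 1, the exact solution is u(x) = x(1 - x)/2, so `solve_poisson_1d(8)` can be checked against it at the nodes. Note that `k_local` comes from a fixed formula rather than from data.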

However, the crucial difference between the two is that FEM uses numerical formulations—not learned data representations—to produce a solution. So, FEM by itself doesn’t meet the foundation model criteria because it doesn’t train on data.

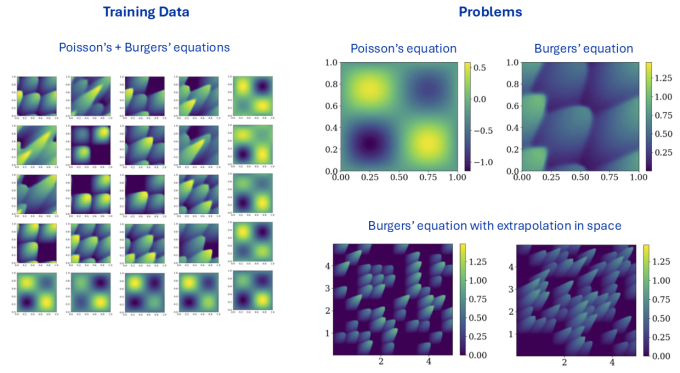

DD-FEM bridges this gap by replacing FEM’s conventional basis functions with data-driven bases. It generates training data from small subdomains of high-fidelity simulations that vary in geometry, mesh, boundary conditions, and other features. Because these subdomains are small, simulating them is computationally inexpensive, which mitigates what would otherwise be a big data problem.
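One common way to obtain a data-driven basis from simulation snapshots is proper orthogonal decomposition (POD), computed with a singular value decomposition. The sketch below uses POD as a stand-in, since this article does not specify how DD-FEM constructs its bases. The snapshot generator `make_snapshots` is a hypothetical substitute for real subdomain simulation data, and all function names are illustrative.

```python
import numpy as np


def make_snapshots(params, n_points=50):
    """Hypothetical stand-in for subdomain simulation data: one column per parameter."""
    x = np.linspace(0.0, 1.0, n_points)
    return np.column_stack([np.exp(-mu * x) for mu in params])


def build_data_driven_basis(snapshots, energy=0.999999):
    """Return orthonormal basis vectors (columns) capturing the requested snapshot energy."""
    U, s, _ = np.linalg.svd(snapshots, full_matrices=False)
    cumulative = np.cumsum(s**2) / np.sum(s**2)
    # Smallest number of modes whose cumulative energy reaches the threshold.
    r = min(int(np.searchsorted(cumulative, energy)) + 1, len(s))
    return U[:, :r]


def project(basis, field):
    """Approximate a field by its orthogonal projection onto the learned basis."""
    return basis @ (basis.T @ field)


# Train on many small "simulations", then reuse the basis on an unseen case.
train = make_snapshots(np.linspace(0.5, 2.0, 40))
basis = build_data_driven_basis(train)
unseen = make_snapshots([1.23])[:, 0]
relative_error = np.linalg.norm(unseen - project(basis, unseen)) / np.linalg.norm(unseen)
```

The design point is that a handful of learned modes can replace generic basis functions on a subdomain, and the same modes can then represent cases that were not in the training set.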

As this new model continues learning, extrapolation to larger and longer spatiotemporal regimes becomes possible. “Once the data-driven element is trained on a large dataset of small-scale subdomains, these local representations can be applied to many scenarios,” says Choi. For instance, the data that comes from solving Navier-Stokes equations on a tiny tetrahedral element can inform models of airflow around a wing, arterial blood circulation, or ocean currents.

Conversation Starter

Preliminary results with DD-FEM are promising, showing low error rates and significant speedups compared to traditional FEM baselines. (See Figure 2.) The team emphasizes that DD-FEM isn’t prescriptive but, rather, provides a blueprint for a flexible, reusable, and reproducible foundation model in computational science research.

Ultimately, the authors urge the computational science community to action as AI/ML paradigms become more prevalent in scientific research, including at LLNL. “If research groups proceed in different directions with what they call foundation models, it’s unclear to the rest of us examining the literature or conducting our own research. The community needs to know what’s happening and what to expect,” explains Anderson. Choi adds, “We are opening a dialogue so the community can come together around this concept of foundation models.” The team’s paper has already attracted attention from the computational science and AI/ML communities, including invitations to present at industry and academic venues.

— Holly Auten