Despite the success and ambition of ML and AI solutions, data-driven techniques remain rather “dumb”: whereas a child can distinguish a horse from a cow after seeing only a few examples, a typical computer vision system may require tens of thousands. Artificial networks are highly task-specific, have difficulty generalizing, and are now larger than the populations of biological neurons directly involved in specific tasks, such as learning new words. Beyond architecture, training methods may also shape a network’s capabilities.

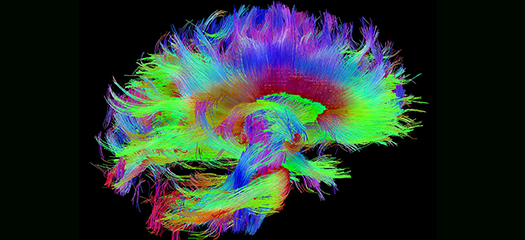

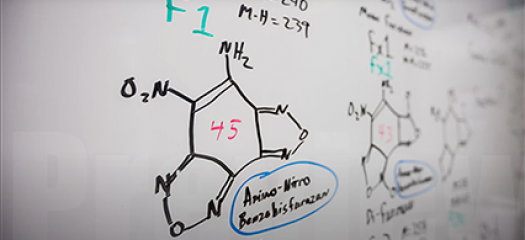

Together with cognitive scientists at Georgetown University, we are exploring the gap between machine and human intelligence. Using signals of human brain activity, we aim to understand the sequence of events that enables a person to learn, and to translate these insights into new brain-inspired training algorithms for artificial neural networks.

This project is an excellent example of a multidisciplinary team combining the latest in fundamental ML research with cognitive science to explore radically new directions with high-impact applications.