In July 2025, Lawrence Livermore researchers participated in a Federal Energy Regulatory Commission (FERC) conference directed at identifying how improved modeling software can enable more efficient and safe power grid operations. Four of the Laboratory’s early-career researchers presented, highlighting their work in integrated computational modeling and advanced algorithm development to inform power grid operator optimization strategies and future infrastructure investments.

The work was performed under the direction of Jean-Paul Watson, a Distinguished Member of Technical Staff in the Laboratory’s Center for Applied Scientific Computing (CASC), who is also the associate program leader for the Disaster Resilience area within the Global Security (GS) Principal Directorate. “Power grid reliability and resiliency is an area that our newer, early-career professionals are very enthusiastic about,” says Watson. “They understand how important the work is to the national interest.”

The FERC-presented work is directly tied to Livermore’s energy security objectives, which include developing advanced computational tools for evaluating and informing critical infrastructure operations. It also supports the Department of Energy’s NAERM (North American Energy Resilience Model) project to improve predictions of how various threats could impact grid reliability and resiliency. The studies build upon previous Laboratory efforts aimed at using data-driven integration and computational modeling to find the most cost-effective investment strategies for bolstering the nation’s energy grid to meet demand and harden it against vulnerability.

Planning for Extreme Weather Events

The best plan for minimizing the cost of infrastructure investment and future operational costs must account for the grid’s ability to withstand threats, including uncertain extreme weather-related events. Juliette Franzman, a data scientist in the GS Computing Applications Division, illustrates the utility of integrated models for assessing the power grid reliability impacts of concurrent extreme heat waves and wildfires in the Western United States. “Concurrent events typically have a low probability and are therefore not well represented in the historical data used for power grid planning,” says Franzman. “However, in recent years, we have seen an increase in these types of events, so simulations and projections could help improve reliability studies for future planning.”

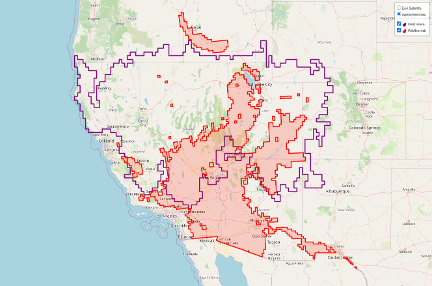

As standalone occurrences, extreme heat can drive up electrical load, spurred by the need for air conditioning, while wildfires risk damaging grid components, such as transmission lines, or may require system operators to de-energize parts of the grid to avoid catastrophic failure. The combined impacts of both conditions may create a larger reliability risk. To evaluate this possibility, the research team used high-resolution decadal projection data from E3SM (the Energy Exascale Earth System Model) to simulate potential heat wave scenarios over 100 years (from 2000 to 2099). Franzman says, “Once we identified an event that matched criteria for temperature, duration, and size (in this case, an area roughly the size of California), we compared heat wave events identified in the dataset with historical records and input those parameters into a model that forecasts expected power demand based on temperature.”
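The temperature and duration criteria can be pictured with a minimal sketch. It is not the team’s event-detection method: it assumes a single daily maximum temperature series for one location, a hypothetical threshold and minimum duration, and it ignores the spatial size criterion entirely. The function name and sample values are illustrative only.

```python
def find_heat_waves(daily_max_temps, threshold, min_days):
    """Return (start_index, length) for each run of days at or above threshold."""
    events = []
    run_start = None
    for day, temp in enumerate(daily_max_temps):
        if temp >= threshold:
            if run_start is None:
                run_start = day  # a new hot spell begins
        else:
            # The spell just ended; keep it only if it lasted long enough.
            if run_start is not None and day - run_start >= min_days:
                events.append((run_start, day - run_start))
            run_start = None
    # Handle a spell that runs to the end of the series.
    if run_start is not None and len(daily_max_temps) - run_start >= min_days:
        events.append((run_start, len(daily_max_temps) - run_start))
    return events


# Hypothetical daily maxima in degrees Celsius.
TEMPS = [31, 36, 38, 39, 33, 37, 36, 30, 40, 41, 42, 40]
EVENTS = find_heat_waves(TEMPS, threshold=35, min_days=3)
print(EVENTS)
```

With these sample values, the two-day spell in the middle of the series is discarded because it is shorter than the assumed three-day minimum.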

Wildfire risk maps—generated using the Fire Weather Index from Argonne National Laboratory’s ClimRR model—were then overlaid with the power grid data using a geometric computation to determine the most at-risk grid components. Franzman says, “We wanted to know which components would have the biggest impact on power grid reliability by maximizing load loss.” Heat wave and wildfire data were subsequently fed into a NAERM-based AC power flow model representing the U.S. Western interconnect to predict the combined impact on power reliability. “Our results showed a significant load loss of about 1 percent for the entire interconnect, resulting in the disconnection of multiple substations and increased voltage and branch violations.”
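The article does not describe the geometric computation in detail, so the following is only one plausible way to overlay a risk raster on line geometry. It assumes a hypothetical square grid of Fire Weather Index values, straight transmission line segments in the same coordinate system, and point sampling along each segment, which is an approximation rather than an exact intersection test.

```python
def max_risk_along_line(risk_grid, cell_size, start, end, samples=50):
    """Highest risk value among raster cells hit by points sampled on a segment."""
    (x0, y0), (x1, y1) = start, end
    worst = 0.0
    for k in range(samples + 1):
        t = k / samples
        x, y = x0 + t * (x1 - x0), y0 + t * (y1 - y0)
        row, col = int(y // cell_size), int(x // cell_size)
        # Ignore sample points that fall outside the raster.
        if 0 <= row < len(risk_grid) and 0 <= col < len(risk_grid[0]):
            worst = max(worst, risk_grid[row][col])
    return worst


def rank_lines_by_risk(risk_grid, cell_size, lines):
    """lines: dict of name -> (start, end). Returns names, highest risk first."""
    scores = {name: max_risk_along_line(risk_grid, cell_size, start, end)
              for name, (start, end) in lines.items()}
    return sorted(scores, key=scores.get, reverse=True)


# Hypothetical 3 x 3 Fire Weather Index raster with 10 km cells.
FWI = [[5, 10, 40],
       [5, 20, 60],
       [5, 5, 30]]
# Hypothetical line segments, coordinates in km.
LINES = {"A": ((1, 1), (9, 29)), "B": ((5, 5), (25, 15)), "C": ((22, 22), (28, 28))}
RANKING = rank_lines_by_risk(FWI, 10, LINES)
print(RANKING)
```

A production workflow would more likely rely on a geospatial library and exact line and polygon intersections; the sampling approach is used here only to keep the example free of dependencies.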

In the future, Franzman aims to incorporate more data points into the models, such as humidity and population density, to further improve model performance. Franzman, who spearheads efforts to develop interactive maps of simulation results as tools for follow-on investigations, would also like to see Livermore build up its own internal dataset for use in studies. “By building our own library, we reduce the expense of having to license the data from elsewhere. We could also improve data accuracy and share it with other national laboratories to more easily advance this important field of research.”

Increasing Grid Capacity

As power demand grows and the infrastructure landscape becomes ever-more complex, innumerable combinations of investment options and operational scenarios must be evaluated to guarantee future grid reliability and efficiency. At the FERC conference, Elizabeth Glista, a researcher in the Laboratory’s Computational Engineering Division (CED), and CASC postdoctoral researcher Tomás Valencia Zuluaga delved into the specifics of their work developing stochastic programming techniques for improving power grid capacity expansion planning.

Instead of deterministic modeling approaches that try to solve a specific problem for a single forecast of future conditions, stochastic programming incorporates uncertainty—weather data and power demand, in this case—to solve for larger-scale, real-world scenarios. However, accounting for this uncertainty within an electrical grid model that has tens of thousands of decision variables, including power lines, power plants, and substations, presents an extremely large, computationally intensive optimization problem.

“Much of our stochastic optimization work is focused on how to solve these larger problems in parallel using the Laboratory’s high performance computing (HPC) capabilities,” says Glista, whose FERC study applies stochastic programming to prioritize grid storage and generation projects awaiting connection to the transmission system. “Our goals are to use high-fidelity, long-term planning models that incorporate uncertainty to down-select projects in the interconnection queue and to better understand how the current interconnection process limits long-term investments and operations.”

In the United States, any new utility-scale storage or generation project, such as proposed wind and solar farms, must undergo a series of interconnection studies to determine if enough transmission capacity exists to operate and connect a particular generator or storage unit at a specific location. This assessment is a multiyear process that few projects successfully complete. To better inform which proposed generation and storage projects support resiliency and are the most cost-effective in a given power grid system, the team’s stochastic capacity expansion planning framework uses a two-stage, mixed integer model. Glista says, “The first-stage decisions are the set of infrastructure investments, and in the second stage, we optimize power system operation based on a fixed investment portfolio. The model aims to meet projected electricity demand at minimum cost for various future weather scenarios.”
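In generic textbook form, a two-stage stochastic program of the kind Glista describes can be written as follows. The notation is an illustrative assumption and not the team’s formulation: x collects the first-stage investment decisions (some of them integer) with cost vector c, S is the set of weather scenarios with probabilities p_s, and y_s collects the second-stage operating decisions in scenario s with cost vector q_s.

```latex
\min_{x \in X} \; c^\top x + \sum_{s \in S} p_s \, Q_s(x),
\qquad
Q_s(x) = \min_{y_s \ge 0} \left\{ q_s^\top y_s \;:\; W_s y_s \ge h_s - T_s x \right\}
```

Here the constraint inside Q_s(x) stands in for demand balance and capacity limits: the matrices W_s and T_s and the right-hand side h_s tie what can be operated in scenario s to what was built in the first stage.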

For the FERC feasibility study, Glista and her colleagues applied the framework to the open-source California Test System (CATS), which includes more than 8,000 buses (power grid interconnection points) and 10,000 transmission lines. They mapped projects in current California interconnection queues to different buses in CATS and used stochastic capacity expansion planning to determine how much and what types of new generation and storage capacity would be most effective for specific sites.

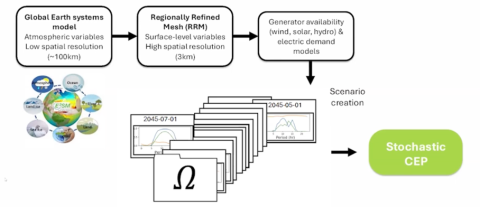

The team’s high-fidelity model incorporates E3SM data at 100-kilometer resolution and uses a regionally refined mesh to simulate more detailed topology of a geographic area (in this case, California) at 3 kilometers. The future weather data is used to provide estimates for future availability of wind, solar, and hydropower as well as future electric power demand. Combining this weather data with the existing CATS power system and technoeconomic data on future investments, the stochastic capacity expansion framework links all these fixed and random parameters to mathematical equations that are solved for various investment and operational scenarios, incorporating constraints such as resource availability and land use restrictions.

“The end goal is to upgrade the model so that it can accurately capture uncertainty quantification metrics and scenario reduction techniques to increase confidence in our results and inform real decisions for future grid planning,” says Glista. Toward that goal, Valencia Zuluaga, whose background is in industrial engineering and operations research, is studying alternative representations of the power flow equations to reduce computational bottlenecks within the team’s stochastic programming algorithms. He says, “Our power grid model uses a combination of binary, continuous, and integer variables to account for all possible outcomes, but the presence of these integer variables in the formulation makes the problem very challenging to solve at larger scale.”

The team’s stochastic optimization techniques must account for a wide range of operational scenarios, such as variations in temperature, wind speed, and solar irradiance, and how those factors will affect power generation, storage, and demand on an hourly basis over a one-year timeframe. Valencia Zuluaga’s initial work on the model focused on ways of decomposing the overarching optimization problem into smaller subcomponents, for example, via coordination algorithms such as progressive hedging. This technique allows individual pieces to run faster and in parallel on the Laboratory’s computing clusters. “Each of those days and conditions is calculated separately, with different compute clusters calculating the optimal investment plan for each case,” he says. “Ideally, we decompose the problem across scenarios and representative days and run iterations of the algorithm until they agree on one investment plan that works best concurrently across all the scenarios and representative day combinations.”
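The coordination loop behind progressive hedging can be illustrated on a deliberately tiny problem. The sketch below is not the team’s implementation: it assumes a single continuous investment variable (MW of capacity), three hypothetical scenarios, and a quadratic cost in each scenario so that every subproblem has a closed-form solution. In the real mixed integer setting, each subproblem would be handed to an optimization solver, and convergence is not guaranteed.

```python
def progressive_hedging(scenarios, rho=1.0, tol=1e-8, max_iter=500):
    """scenarios: list of (probability, curvature a, preferred capacity d).

    Scenario cost is assumed to be a/2 * (x - d)**2. Returns the consensus
    capacity and the number of iterations used.
    """
    # Iteration 0: every scenario picks its own preferred plan.
    x = [d for _, _, d in scenarios]
    x_bar = sum(p * xs for (p, _, _), xs in zip(scenarios, x))
    w = [rho * (xs - x_bar) for xs in x]  # price on disagreeing with consensus
    for iteration in range(1, max_iter + 1):
        # Closed-form minimizer of a/2 (x-d)^2 + w x + rho/2 (x - x_bar)^2.
        x = [(a * d - ws + rho * x_bar) / (a + rho)
             for (_, a, d), ws in zip(scenarios, w)]
        x_bar = sum(p * xs for (p, _, _), xs in zip(scenarios, x))
        w = [ws + rho * (xs - x_bar) for ws, xs in zip(w, x)]
        # Stop once all scenario plans agree with the consensus plan.
        if max(abs(xs - x_bar) for xs in x) < tol:
            break
    return x_bar, iteration


# Hypothetical scenarios: (probability, cost curvature, preferred capacity in MW).
SCENARIOS = [(0.3, 1.0, 80.0), (0.5, 2.0, 100.0), (0.2, 4.0, 130.0)]
consensus, iterations = progressive_hedging(SCENARIOS)
print(f"consensus capacity: {consensus:.2f} MW after {iterations} iterations")
```

Each scenario subproblem in the loop is independent of the others, which is what allows the work to be spread across compute nodes. For this toy problem the consensus settles at about 108.57 MW, the plan that minimizes the probability-weighted cost across all three scenarios.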

Valencia Zuluaga’s early work in this area solved smaller grid models with simplified representations of the underlying physics as the team built out the model’s capabilities. For the FERC study, he focused on how the model could be improved to better depict transmission capacity limits to ensure the optimal investment plan does not lead to transmission line overload in the system. “We are trying to run higher fidelity simulations that accurately incorporate how electricity flows through the grid, which is dependent on the physics, such as the attributes of the wires, and representing those physics, including energy balance and power flow constraints, in a way that the problem doesn’t explode computationally,” he says. The approach combines the PTDF (Power Transfer Distribution Factor) matrix, which quantifies how a change in power injection at one bus affects power flow on each transmission line, with scenario decomposition and progressive hedging to better enforce transmission limits and reduce the problem size.
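To make the PTDF idea concrete, the sketch below builds the matrix for a hypothetical three-bus triangle network under the standard DC power flow approximation. The network, reactances, and function names are assumptions chosen for illustration and have no connection to the team’s test systems. Once the matrix is known, each line flow is a linear function of the bus injections, so a transmission limit becomes a simple linear constraint with no need for voltage angle variables.

```python
def invert(matrix):
    """Invert a small square matrix by Gauss-Jordan elimination with pivoting."""
    n = len(matrix)
    aug = [list(row) + [1.0 if i == j else 0.0 for j in range(n)]
           for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        scale = aug[col][col]
        aug[col] = [v / scale for v in aug[col]]
        for r in range(n):
            if r != col:
                factor = aug[r][col]
                aug[r] = [v - factor * p for v, p in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def ptdf_matrix(n_buses, lines, slack=0):
    """lines: list of (from_bus, to_bus, reactance). Returns PTDF[line][bus]."""
    keep = [b for b in range(n_buses) if b != slack]
    index = {bus: i for i, bus in enumerate(keep)}
    # Reduced susceptance matrix (slack row and column removed).
    b_red = [[0.0] * len(keep) for _ in keep]
    for i, j, x in lines:
        b = 1.0 / x
        for u, v in ((i, j), (j, i)):
            if u != slack:
                b_red[index[u]][index[u]] += b
                if v != slack:
                    b_red[index[u]][index[v]] -= b
    x_red = invert(b_red)

    def sens(bus, k):
        # Angle sensitivity of `bus` to an injection at `k`; zero at the slack bus.
        if bus == slack or k == slack:
            return 0.0
        return x_red[index[bus]][index[k]]

    return [[(sens(i, k) - sens(j, k)) / x for k in range(n_buses)]
            for i, j, x in lines]


def line_flows(ptdf, injections):
    """Line flows (from_bus -> to_bus positive) for given net bus injections."""
    return [sum(f * p for f, p in zip(row, injections)) for row in ptdf]


# Hypothetical triangle network with equal reactances; bus 0 is the slack bus.
LINES = [(0, 1, 1.0), (1, 2, 1.0), (0, 2, 1.0)]
PTDF = ptdf_matrix(3, LINES)
# 90 MW generated at bus 1 and consumed at bus 2.
FLOWS = line_flows(PTDF, [0.0, 90.0, -90.0])
print([round(f, 1) for f in FLOWS])
```

In this example, 60 MW travels directly from bus 1 to bus 2 and the remaining 30 MW takes the longer path through bus 0, which shows up as a flow of about -30 MW on the line defined from bus 0 to bus 1.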

“We had positive results on an intermediate-size test case based on South Carolina, which considers 500 buses, showing that the stochastic model incorporating PTDF for expansion planning takes significantly less time to solve than a more traditional approach for modeling power flow,” notes Valencia Zuluaga. The goal is to eventually apply it to the much larger California grid. “We start with something smaller because as we implement it, we can fix bugs and errors early on and improve the software implementation before building out to the larger problem.” Valencia Zuluaga is working with colleagues to upgrade the model, including ways to improve the tradeoffs between optimality guarantees and compute time savings.

Revealing Diverse Solution Sets

Ignacio Aravena Solis, a Livermore researcher and leader of CED’s Optimization and Control group, along with colleagues at Georgia Tech and the University of California at Berkeley, is applying a novel algorithm for solving mixed integer linear programs to the problem of unit commitment. Unit commitment determines, on a daily basis, the most cost-effective strategy for turning power generating units on and off to meet forecasted electricity demand while maintaining operational reliability. The work supports NAERM efforts to develop tools that improve reliability and resilience metrics.

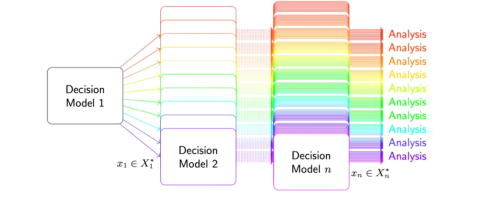

Unit commitment is a high-dimensional optimization problem, one that involves potentially billions of solutions within a numerical tolerance. Tools for solving optimization models use enumeration to provide a single output—a single setting for power producers, for example—and that output is then used to run additional analyses, such as for stability or security simulations. “However,” says Aravena Solis, “within numerical tolerances, another solution may exist that is completely different from the previous one and that supports a different conclusion in our posterior analyses. In the case of unit commitment, the two solutions might support different prices, which result in major cost differences for consumers. The method I’ve been developing uses a novel partitioning algorithm to explore high-dimensional mixed integer polyhedral spaces and generate diverse subsets of acceptable solutions within those spaces.”
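The underlying issue, that very different commitments can sit within a solver’s tolerance of the best cost, can be seen in a toy example. The sketch below is not the partitioning algorithm Aravena Solis describes: it simply enumerates every on/off combination for four hypothetical generators in a single hour, which is only possible because the example is tiny. The unit data and the 0.5 percent tolerance are assumptions.

```python
from itertools import product

# Hypothetical units: (fixed cost $, marginal cost $/MWh, min MW, max MW).
UNITS = [
    (500.0, 20.0, 20.0, 100.0),
    (505.0, 20.0, 20.0, 100.0),
    (50.0, 24.0, 10.0, 50.0),
    (60.0, 24.0, 10.0, 50.0),
]


def commitment_cost(status, demand):
    """Least cost of serving demand with the committed units, or None if infeasible."""
    on = [u for u, s in zip(UNITS, status) if s]
    if not (sum(u[2] for u in on) <= demand <= sum(u[3] for u in on)):
        return None
    cost = sum(u[0] + u[1] * u[2] for u in on)  # fixed cost plus output at minimum
    remaining = demand - sum(u[2] for u in on)
    # Fill the rest of the demand from the cheapest committed units first.
    for _, marginal, p_min, p_max in sorted(on, key=lambda u: u[1]):
        extra = min(remaining, p_max - p_min)
        cost += marginal * extra
        remaining -= extra
    return cost


def near_optimal_commitments(demand, rel_tol):
    """All feasible commitments whose cost is within rel_tol of the best one."""
    costs = {}
    for status in product((0, 1), repeat=len(UNITS)):
        cost = commitment_cost(status, demand)
        if cost is not None:
            costs[status] = cost
    best = min(costs.values())
    return sorted((c, s) for s, c in costs.items() if c <= best * (1 + rel_tol))


def hamming(a, b):
    """Number of units whose on/off status differs between two commitments."""
    return sum(x != y for x, y in zip(a, b))


SOLUTIONS = near_optimal_commitments(demand=100.0, rel_tol=0.005)
for cost, status in SOLUTIONS:
    print(status, cost)
```

For a 100 MW demand, three commitments fall within the tolerance, and the cheapest and the most expensive of them differ in the status of three of the four units. At industrial scale, enumeration is out of the question, which is why a method that searches the space for diverse solutions is needed.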

Solutions for mixed integer problems are typically represented as points (or vertices) on a high-dimensional shape, such as a polyhedron. Inequalities (or constraints) are represented as hyperplanes that restrict that space. Intersections between these hyperplanes can generate fractional vertices that significantly reduce the performance of traditional solvers. Common approaches to the problem of generating multiple solutions tend to generate many of these fractional points, making them impractical for industrial-scale problems. In contrast, the proposed algorithm uses the two points separated by the farthest distance to generate splitting restrictions that direct the search to far-apart subregions and exclude the space in between, providing the tightest possible representation of the space while aiming for maximum diversity. The process is repeated, each time partitioning the space more tightly. Aravena Solis says, “Our method provides a simpler description for the same points, reducing the number of fractional vertices in the problem, allowing us to solve the problem faster and more efficiently.”

Previous efforts applying the customary optimization methodology to the unit commitment problem showed that large variations existed in prices depending on the solution. Aravena Solis says, “In comparison to the baseline technique, our new method finds solution sets that are 25 to 50 times more diverse than was possible to find before with similar computational effort.” The next steps for Aravena Solis and colleagues are to analyze the results of their unit commitment run to see how well the algorithm performed in identifying the actual diversity of the solution set and to expand its capabilities.

Aravena Solis is quick to point out that while the FERC presentation focused on using this methodology for unit commitment, it has broad application and importance within the Laboratory and beyond. “There is a lot of variance in the solution space that is accepted by these mixed integer mathematical formulations, and if we don’t look at what an alternative solution might have implied for our analysis or recommendations, we are ignoring possible answers that might be essential to find the best or most comprehensive solution to a problem,” he says. “Our advanced algorithm provides a tool for identifying which applications may have a high number of variants so that critical alternative solutions aren’t overlooked.”

Just the Beginning

The work presented at this year’s FERC conference showcased how the Laboratory’s early-career researchers are applying their expertise and technical know-how to address major challenges related to power grid reliability and resiliency. The safety and stability of the grid is a national priority, and conferences such as the one hosted by FERC are a good way to collaborate and broaden research perspectives. Watson says, “Presenting at the FERC conference gave our researchers the opportunity to engage with the power industry to better understand how the national laboratories can utilize our capabilities to meet their needs.”

—Caryn Meissner