Disclaimer: This article is more than two years old. Developments in science and computing happen quickly, and more up-to-date resources on this topic may be available.

Machine learning techniques are increasingly being used in the sciences, as they can streamline work and improve efficiency. But these techniques are sometimes met with hesitation: When users don’t understand what’s going on behind the curtains, they may lack trust in the machine learning models.

As these tools become more widespread, a team of researchers in LLNL’s Computing and Physical and Life Sciences directorates are trying to provide a reasonable starting place for scientists who want to apply machine learning, but don’t have the appropriate background. The team’s work grew out of a Laboratory Directed Research and Development (LDRD) project on feedstock materials optimization, which led to a pair of papers about the types of questions a materials scientist may encounter when using machine learning tools, and how these tools behave.

Trusting artificial intelligence is easy when its conclusion is a simple ground truth, like identifying an animal. But when it comes to abstract scientific concepts, machine learning can seem more ambiguous.

“There’s been a lot of work applying machine learning to natural images—cats, dogs, people, bicycles,” says Brian Gallagher, one of the project members and a group leader in the Center for Applied Scientific Computing. “These are naturally decomposable into parts, like wheels or whiskers. Those kinds of explanations aren’t meaningful here; there aren’t subparts to decompose into.”

Though scientific data does feature various types of images—graphs and microscopy, for example—it also includes many other data types. This complicates not only the machine learning process, but also the explainability of this process for scientists who want to understand it.

Learning Machine Learning

There are two extremes when it comes to learning about machine learning: Tutorials tend to be either too basic, making implementation difficult in a particular application, or they’re too specific to a certain scientific problem. Neither of these is generally useful.

“Machine learning is very empirical. There’s very little theory guiding it, unlike a lot of science,” Gallagher explains. “You train a model, test it in the scenarios you care about, and it either works or it doesn’t.”

This is where explainable artificial intelligence (XAI) comes in. XAI is an emerging field that helps interpret complicated machine learning models, providing an entry point to new applications. XAI may use tools like visualizations that identify which features a neural network finds important in a dataset, or surrogate models to explain more complex concepts. In their recent paper, Gallagher and the LDRD team present a number of different XAI tools along with examples of how to apply them to materials science.

Without XAI, scientists are expected to blindly trust a neural network’s conclusions without fully understanding how these conclusions were reached.

“That’s not very satisfying to scientists who, by nature, want to have explanations for everything,” Gallagher comments. He says machine learning techniques can be even further integrated into scientific workflows if XAI is used to help illustrate how these processes work.

Materials Science and Beyond

According to Anna Hiszpanski, a materials scientist on the LDRD project and co-author on the papers, over 50 projects in Physical and Life Sciences utilize machine learning and artificial intelligence. These range from battery development to creating structural components, and they use many different types of materials, including metals, composites, polymers, and small molecules.

All these applications have one thing in common: “We’re trying to use machine learning to reduce the timeline we have for developing new materials,” says Hiszpanski.

To this end, a trained model can predict the properties of a molecule or polymer before it is synthesized. This helps ensure the material will have any specific desired features, while reducing the time spent on trial and error.

“Usually, when you’re trying to come up with a new material, you want something outside the norm. You want some ‘ultra’ property—ultrahigh or ultralow,” Hiszpanski explains. “Trying to figure out what you need to change about the chemistry to give you that ‘ultra’ property is hard. That’s what scientists try to develop and have hypotheses for, but explanations provided by machine learning are helpful and can help build confidence.” For example, a trained machine can tell the scientists about apparent correlations between various properties in a molecule—how tweaking one characteristic can affect another to improve the material.

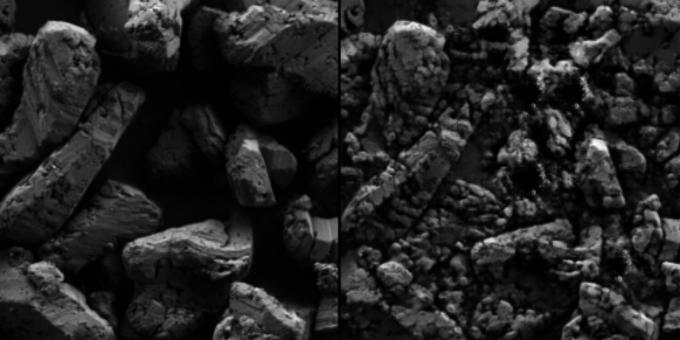

Here at the Lab, this technique has been used to predict the strength performance of a modified powder without having to manufacture the modifications for testing. The powder in question is triaminotrinitrobenzene (TATB), an insensitive high explosive, which means it can withstand extreme conditions without detonating. The team trained a neural network on real scanning electron images of TATB to understand how its strength scales, and then asked the network to predict what a stronger version of TATB would look like. In response, the XAI algorithm created artificial scanning electron images of a finer powder. It broke down larger particles into smaller pieces, and filled in empty spaces with even smaller grains. This provided the scientists with something interpretable: It told them that smaller pieces of TATB would perform better in terms of strength, giving them a focused direction for what they should be trying to make.

Though machine learning is a helpful scientific tool, it cannot fully replace the human element. Computers can quickly analyze a large amount of data, but individual experts are still needed to interpret the information and use it to generate new scientific hypotheses.

“We have these digital tools to help us decide what to make, but we still have to figure out how to make it, and then actually make it,” Hiszpanski says. She sees the advent of XAI tools as an opportunity for an exciting new research direction.

—Anashe Bandari