Disclaimer: This article is more than two years old. Developments in science and computing happen quickly, and more up-to-date resources on this topic may be available.

LLNL continues to make an impact at top machine learning (ML) conferences, even as much of the research staff works remotely during the COVID-19 pandemic. Postdoctoral researcher James Diffenderfer and computer scientist Bhavya Kailkhura, both from LLNL’s Center for Applied Scientific Computing (CASC), are co-authors on a paper—“Multi-Prize Lottery Ticket Hypothesis: Finding Accurate Binary Neural Networks by Pruning a Randomly Weighted Network”—that offers a novel and unconventional way to train deep neural networks (DNNs). The paper is among the Lab’s acceptances to the International Conference on Learning Representations (ICLR 2021), which takes place May 3–7.

Diffenderfer states, “We’ve discovered a completely new way to train neural networks that can be made less computationally expensive and reduces the amount of necessary compute storage.” The work is part of Kailkhura’s Laboratory Directed Research and Development project on trustworthy ML. Robustness and reliability of ML models have become more important as national security applications increasingly rely on artificial intelligence and related technologies. Finding efficiencies in DNNs, while ensuring their accurate predictions, is one avenue to making ML models more reliable.

The Ticket to Deep Learning

Deep learning (DL) algorithms learn from features in data without being explicitly trained to do so, and DL has become a crucial tool in scientific research. However, as these models grow in size, they consume massive amounts of compute resources including memory and power. “The current trend is to dedicate a lot of computer power to training a deep learning model for high performance,” Kailkhura notes. “Training a single model can generate as much of a carbon footprint as multiple cars in their lifetime.”

So, what is the most efficient way to create trustworthy NNs? The CASC team turned to an ICLR 2019 paper from the Massachusetts Institute of Technology, which described the lottery ticket hypothesis. This relatively new concept in ML robustness proposes that pruning of subnetworks within an NN can match the test accuracy of the original, dense network with iterative training. The “winning ticket” is the subnetwork that has been pruned with a weight-training paradigm wherein the model iteratively learns the values of weighted parameters by stochastic gradient descent.

Diffenderfer explains, “The original winning ticket was discovered using an iterative loop of training and pruning.” Although the resulting subnetwork is 80–90% smaller than the original with the same accuracy, iterative pruning is still computationally expensive. He continues, “We determined that you can find a different type of winning ticket using this iterative training and pruning loop. You can—and we did—do it in a single shot.” Additionally, the NN can be compressed even further by storing the weights in a single bit instead of in a floating-point representation (32 bits).

Winning Multiple Prizes

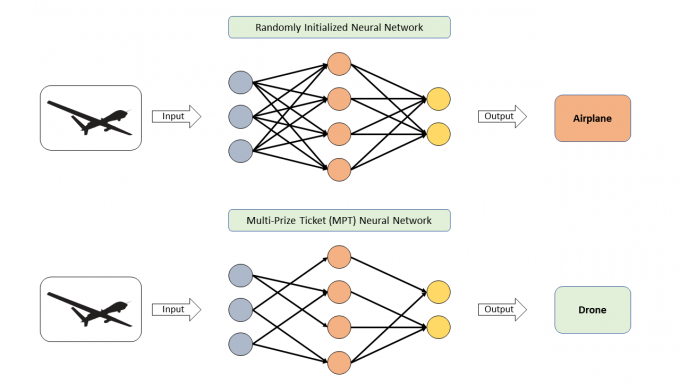

In sharp contrast to their predecessors’ approach, the CASC team shows both theoretically and empirically that it is possible to develop highly accurate NNs simply by compressing—i.e., pruning and binarizing—randomized NNs without ever updating the weights. “Binary networks can potentially save on storage and energy as they consume less power,” Diffenderfer says.

In this process, the team proves what they call the multi-prize lottery ticket hypothesis: Hidden within a sufficiently large, randomly weighted NN lie several subnetworks (winning tickets) that

- have comparable accuracy to a dense target network with learned weights (prize 1);

- do not require any training to achieve prize 1 (prize 2); and

- are robust to extreme forms of quantization (i.e., binary weights and/or activation) (prize 3).

In several state-of-the-art DNNs, the research found subnetworks that are significantly smaller at only 5–10% of the original parameter counts and keep only signs of weights and/or activations. These subnetworks do not require any training but match or exceed the performance of weight-trained, dense, and full-precision DNNs. The team’s paper demonstrates that this counterintuitive learning-by-compressing approach results in subnetworks that are approximately 32x smaller and up to 58x faster than traditional DNNs without sacrificing accuracy.

In other words, the multi-prize paradigm offers compression that is just as powerful as training the initial NN. These subnetworks perform well when tested on the CIFAR-10 and ImageNet datasets, and can serve as universal approximators of any function given a sufficient amount of data. Kailkhura points out, “Multi-prize tickets are significantly lighter, faster, and efficient while maintaining state-of-the-art performance. This work unlocks a range of potential areas where DNNs can now be applied.” Such resource-intensive areas include collaborative autonomy; natural language processing models; and edge computing applications in environment, ocean, or urban monitoring with cheap sensors.

—Holly Auten