At LLNL, data isn’t just data; it’s collateral, and the Laboratory’s high performance computing (HPC) users produce massive quantities of it, averaging 30 terabytes (the equivalent of 58 years’ worth of digital music) every day. Not only must the data be kept indefinitely, but much of it is also classified. The challenge is that desktops, servers, and local file systems are limited not only in size but also in security, and they are subject to the vagaries of power supplies, bugs, hackers, and purges. The solution, then, is to archive.

The good news is that LLNL is home to one of the world’s preeminent data archives, run by Livermore Computing’s (LC’s) Data Storage Group. The group is tasked with meeting some of the most complex archiving demands in the world. As Todd Heer, the Data Storage Group’s High Performance Storage System (HPSS) Executive Committee representative, describes it, “Along with data preservation requirements at huge scale, we have the added competing requirement of providing performance commensurate with an HPC center that houses the world’s fastest supercomputers.”

Toward that end, Livermore’s archive leverages HPSS, a hierarchical storage management (HSM) application that runs on a user-friendly, extremely scalable, and lightning-fast cluster architecture. The result: vast amounts of data can be both stored securely and accessed quickly for decades to come.

HPSS—Born in Livermore

If HPSS sounds ideally suited to the needs of Lawrence Livermore, that’s because the technology was developed here. HPSS is the brainchild of LLNL’s own Dick Watson, who also helped develop technologies such as email and network packet switching during his time at SRI International, where he worked with DARPA in the early ’70s.

In 1992, Watson began an ongoing collaboration among LLNL, three other government laboratories, and IBM to develop a scalable and efficient data-archiving system. Written in the C programming language, HPSS enabled LLNL’s archive to store billions of objects long before any competing system could. In 1997, HPSS won an R&D 100 Award, and it has since been widely adopted by some of the largest, most notable data-crunching organizations around the world.

How It Works

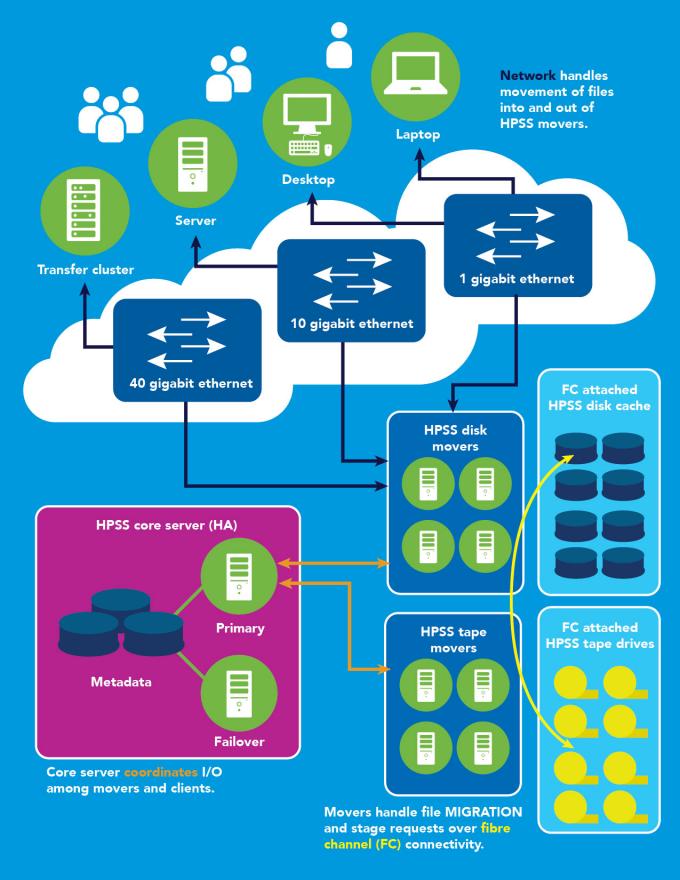

In layperson’s terms, clients (be they laptops or high performance computers such as Sierra) have some form of disk-based file system, which is close, fast, and suitable for short-term storage. A data archive, suitable for long-term storage, lives farther away on the local area network. With HPSS specifically, the archive consists of (1) hardware, namely some number of servers, disk cache, tape drives, and cartridge libraries with robotics that retrieve the tapes, and (2) software, specifically an HSM policy engine that manages the data flow.

Using one of several interfaces, either graphical or command line, a user selects files to be archived, and the transfer begins. The files land on the first tier of the archive, the fast-access disk cache, at which point the user is free to remove them from local storage. From the cache, HPSS migrates copies of the files onto tape, the second archival tier. Even then, the files remain in the cache for a grace period, which at LLNL is now around 300 days, up from 20 days thanks to a recent major enhancement.
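The two-tier flow described above can be sketched as a toy policy engine. This is a minimal illustration, not the HPSS software or its API: the class and method names (`TwoTierArchive`, `store`, `migrate`, `purge`) are hypothetical, days are simulated integers, and the grace period is assumed to be counted from the day a file lands in the cache. A cache copy is dropped only once a tape copy exists and the roughly 300-day grace period has elapsed.

```python
from dataclasses import dataclass

GRACE_PERIOD_DAYS = 300  # approximate LLNL grace period cited in the text


@dataclass
class ArchivedFile:
    name: str
    stored_day: int        # simulated day the file landed in the disk cache
    on_tape: bool = False  # becomes True once a copy has migrated to tape
    in_cache: bool = True  # tier 1: every file lands on disk cache first


class TwoTierArchive:
    """Toy disk-cache + tape hierarchy (hypothetical; not the HPSS API)."""

    def __init__(self, grace_days: int = GRACE_PERIOD_DAYS) -> None:
        self.grace_days = grace_days
        self.files: dict[str, ArchivedFile] = {}

    def store(self, name: str, today: int) -> None:
        # The transfer from the client lands on the fast-access disk cache.
        self.files[name] = ArchivedFile(name, stored_day=today)

    def migrate(self) -> None:
        # Copy cached files to tape (tier 2); the cache copy is kept.
        for f in self.files.values():
            if f.in_cache:
                f.on_tape = True

    def purge(self, today: int) -> list[str]:
        # Evict a cache copy only if it is safely on tape AND the grace
        # period has fully elapsed; return the names that were evicted.
        evicted = []
        for f in self.files.values():
            expired = today - f.stored_day >= self.grace_days
            if f.in_cache and f.on_tape and expired:
                f.in_cache = False
                evicted.append(f.name)
        return evicted


archive = TwoTierArchive()
archive.store("run42.h5", today=0)
archive.migrate()
print(archive.purge(today=100))  # [] (still within the grace period)
print(archive.purge(today=300))  # ['run42.h5']
```

Keeping `purge` separate from `migrate` mirrors the ordering in the text: a file is copied to tape first and evicted from disk only later, so the tape copy always exists before the fast copy goes away.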

During this grace period, a requested file is served straight from the disk cache, so the time-to-first-byte is nearly instantaneous. Otherwise, a robot grabs the relevant tape and mounts it in a nearby drive, and the data are transferred up through the disk cache and out to the user, with an average time-to-first-byte of around a minute.

Tape drives may seem archaic, but for sequential (non-random) access they can stream faster than their disk-based counterparts (540 megabytes per second in Livermore’s deployment) and cost less. In fact, advancements in tape technology have outpaced those in disk over the last 10 years. Tape also preserves data integrity more reliably than disk, and it stores significantly more data. Furthermore, a tape cartridge uses no power while it sits in its cell waiting to be read.
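Combining these figures gives a rough feel for tape recalls. The sketch assumes a fixed 60-second time-to-first-byte (the “around a minute” average quoted above) followed by sequential streaming at 540 MB/s, in decimal units; it ignores drive contention, positioning differences within a tape, and network time from the cache to the client. The function and constant names are hypothetical.

```python
TTFB_S = 60.0        # assumed average tape time-to-first-byte (~1 minute)
STREAM_BPS = 540e6   # per-drive streaming rate: 540 MB/s, decimal units


def tape_recall_seconds(size_bytes: float) -> float:
    """Rough recall time: mount/position latency, then sequential streaming."""
    return TTFB_S + size_bytes / STREAM_BPS


# File size at which streaming time equals the mount latency.
BREAKEVEN_BYTES = TTFB_S * STREAM_BPS

for label, size in [("1 GB", 1e9), ("100 GB", 100e9), ("1 TB", 1e12)]:
    print(f"{label}: {tape_recall_seconds(size):.0f} s")
print(f"break-even size: {BREAKEVEN_BYTES / 1e9:.1f} GB")
```

By this estimate, files smaller than about 32 GB spend most of their recall time waiting for the mount, which suggests why a long disk-cache grace period is valuable for files that are reread.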

Technologists peering under the hood of Livermore’s archive system would see the first use of 40-gigabit Ethernet between clients and the disk movers, moderated by two core servers (one primary, one failover) that use metadata to coordinate the flow of data between clients and movers. Disk movers are directly connected to the disk cache via Fibre Channel (FC). Tape movers are similarly directly connected to their assigned tape drives, which reside in tape libraries that use “any cartridge/any slot” technology to maximize flexibility and efficiency.
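The division of labor between core servers and movers can be illustrated with a toy model in which a client asks a core server only where a file lives and then moves the bytes directly with the named mover. Everything here is hypothetical: `CoreServer`, `CorePair`, and the in-memory `dict` standing in for the metadata store are for illustration only and do not reflect how HPSS actually stores its metadata. The pair tries the primary first and falls back to the failover server if the primary is down.

```python
from dataclasses import dataclass


@dataclass
class CoreServer:
    name: str
    metadata: dict[str, str]  # hypothetical: file name -> mover that holds it
    up: bool = True

    def locate(self, filename: str) -> str:
        if not self.up:
            raise ConnectionError(f"{self.name} is unavailable")
        return self.metadata[filename]  # KeyError if the file is unknown


class CorePair:
    """Primary/failover pair that answers metadata lookups only."""

    def __init__(self, primary: CoreServer, failover: CoreServer) -> None:
        self.servers = [primary, failover]

    def locate(self, filename: str) -> str:
        for server in self.servers:  # primary first, then failover
            try:
                return server.locate(filename)
            except ConnectionError:
                continue
        raise RuntimeError("no core server is available")


shared = {"run42.h5": "disk-mover-3"}  # both servers see the same metadata
primary = CoreServer("core-a", shared)
failover = CoreServer("core-b", shared)
pair = CorePair(primary, failover)

primary.up = False  # simulate a primary failure
mover = pair.locate("run42.h5")
print(f"client streams run42.h5 directly via {mover}")
```

In this model only small metadata lookups pass through the core servers, so switching to the failover does not require rerouting any bulk data.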

The way HPSS scales allows for efficient direct-connect FC, meaning costly storage area network (SAN) switching technology can be avoided. Components are redundant and hot-swappable, enabling service 24 hours a day to accommodate the data-intensive HPC work that occurs around the clock at Livermore.

To get a sense of the scale, consider that LLNL has a complement of Spectra Logic TFinity tape libraries, including a 23-frame unit: the world’s longest (see leaderboard image at top of page). At Livermore’s current tape drive generation and degree of compression, each library complex can store upwards of 640 petabytes. That’s enough space to hold all the written works of humanity thirteen times over. At any moment of the day, hundreds of tape drives are storing, migrating, and remastering data in parallel.
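A little arithmetic puts the capacity and throughput figures in context. This sketch assumes decimal units, a flat 30 TB/day average ingest with no growth and no extra copies, and the 540 MB/s per-drive rate quoted earlier; the 200-drive count is a hypothetical stand-in for “hundreds of tape drives.” It is not a projection of when LLNL’s libraries will fill.

```python
import math

LIBRARY_BYTES = 640e15       # "upwards of 640 petabytes" per library complex
DAILY_INGEST_BYTES = 30e12   # ~30 TB/day average from the introduction
DRIVE_RATE_BPS = 540e6       # per-drive streaming rate, 540 MB/s
SECONDS_PER_DAY = 86_400

days_to_fill = LIBRARY_BYTES / DAILY_INGEST_BYTES      # flat ingest, no growth
years_to_fill = days_to_fill / 365.25
avg_ingest_bps = DAILY_INGEST_BYTES / SECONDS_PER_DAY  # sustained average rate


def aggregate_rate_bps(n_drives: int) -> float:
    """Combined streaming rate if n_drives run in parallel at full speed."""
    return n_drives * DRIVE_RATE_BPS


print(f"{years_to_fill:.1f} years of average ingest per library complex")
print(f"average ingest: {avg_ingest_bps / 1e6:.0f} MB/s")
print(f"drives for the average alone: {math.ceil(avg_ingest_bps / DRIVE_RATE_BPS)}")
print(f"200 hypothetical drives: {aggregate_rate_bps(200) / 1e9:.0f} GB/s")
```

Under these assumptions a single library complex would absorb roughly 58 years of average ingest, and the average ingest rate (about 347 MB/s) is below one drive’s streaming rate; the many drives running in parallel cover storing, migrating, and remastering at the same time, not just the daily average.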

An Ongoing Collaboration

Livermore’s Data Storage Group takes its stewardship of the Laboratory’s data jewels seriously. “Our goal is to keep scientists from having to wrestle with their data,” says Heer. A key aspect of the group’s work is finding and fixing problems before they can affect users.

This work is at the heart of the ongoing collaboration between LLNL and the others involved in developing HPSS. From the beginning, LLNL has served not only as the inspiration for this technology but also as its primary tester and refiner. Several factors make LLNL’s Data Storage Group superb beta testers of the archiving system’s components.

First, as co-developers, they are early adopters of the system and its component technologies. Second, the massive amount of data the Laboratory generates constantly tests the capacity of the system and causes obscure technical problems to occur sooner than they would with other users. As Heer describes it, “More often than not, we’re at the tip of the spear.”

Indeed they are. For instance, the group recently worked all night with a vendor to diagnose and fix what turned out to be, Heer quips, “an undocumented feature” of one of the system’s components. Similarly, when a large internet search engine company had problems handling its tape operations at scale, it came to the Livermore team for advice.

LLNL is a valuable partner to vendors and other major adopters of HPSS technology, but the collaboration benefits the Laboratory too. It gives the Data Storage Group the opportunity to develop finely honed diagnostic skills that can be applied to other computing problems, further supporting Lawrence Livermore’s national security mission.

Moving forward, Heer expects LLNL’s HPSS installation to benefit naturally from industry advancements in hardware technologies such as memory, CPUs, networks, and storage devices. Likewise, the metadata server software is undergoing a significant update that will let it scale out as the movers already do, so that it can keep up with the increasing demands of the exascale era.
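Scaling out metadata generally means spreading the namespace across several servers instead of one. The text does not say how the updated HPSS metadata servers will do this, so the following is only a sketch of one common technique, hash partitioning: each file path is hashed with SHA-256 and assigned to one of `n_shards` servers. `shard_for` and `ShardedMetadata` are hypothetical names, and plain dictionaries stand in for the servers.

```python
import hashlib


def shard_for(path: str, n_shards: int) -> int:
    """Map a path to a shard. SHA-256 is stable across processes, unlike the
    built-in hash(), which Python salts per process for strings."""
    digest = hashlib.sha256(path.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % n_shards


class ShardedMetadata:
    """Hypothetical metadata service split across n_shards servers."""

    def __init__(self, n_shards: int) -> None:
        # Each dict stands in for one metadata server's store.
        self.shards: list[dict[str, str]] = [{} for _ in range(n_shards)]

    def put(self, path: str, location: str) -> None:
        self.shards[shard_for(path, len(self.shards))][path] = location

    def get(self, path: str) -> str:
        return self.shards[shard_for(path, len(self.shards))][path]


meta = ShardedMetadata(n_shards=4)
for i in range(1000):
    meta.put(f"/proj/run{i}/out.h5", f"tape-{i % 7}")
print([len(s) for s in meta.shards])  # entries held by each shard
```

Plain modulo hashing reassigns most paths when the shard count changes; schemes such as consistent hashing reduce that movement, which matters if servers are added as demand grows.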

--Lothlórien Watkins, LLNL