The scenario is familiar to HPC centers, like LLNL’s, that run large scientific codes on next-generation machines: limited memory resources require smart memory pools, but each vendor’s hardware allocates memory differently, and performance can suffer.

Umpire is an application-focused API for faster, more efficient memory management on non-uniform memory access (NUMA) and GPU architectures. This open-source tool determines the best way to allocate memory across the available nodes and resources, so users don’t have to. Users also need neither hardware expertise nor a separate version of their code for each machine.

Umpire creator David Beckingsale explains, “We’re challenging traditional memory pool implementation in order to support the range of hardware in our HPC center. Instead of forcing developers to commit to one technology-specific implementation, Umpire’s unified API manages the dynamic memory allocation across all of our platforms by creating a memory resource for everything it detects on each system. It’s easy to write reusable memory management code that will accommodate multiple resources.”

At LLNL, Umpire runs on a wide variety of systems and configurations. Many key simulation codes, from stockpile stewardship to seismic monitoring, rely on Umpire to handle memory allocation on heterogeneous supercomputers like Sierra.

Key Features

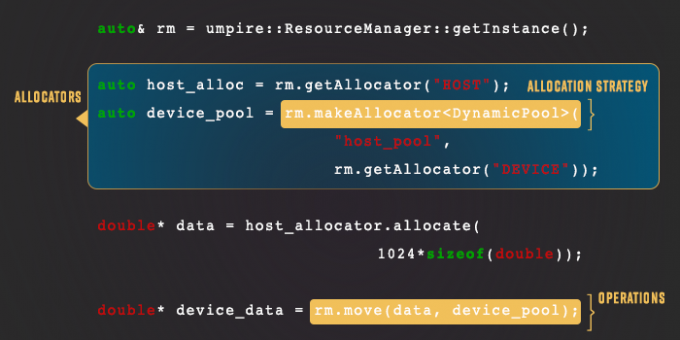

Umpire’s simplified interface sits on top of a flexible allocation process, and it stays the same regardless of which resource holds the memory. Allocators are the fundamental objects used to allocate and deallocate memory; each one encapsulates all the details of how its allocations are made.
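To make the allocator idea concrete, here is a minimal sketch of the pattern, not Umpire’s actual API (Umpire’s own classes, such as `umpire::Allocator`, differ in detail). Every name below (`MemoryResource`, `HostResource`, `AlignedHostResource`, `Allocator`, `make_zeroed_array`) is hypothetical. Two host-memory resources stand in for the different kinds of memory a real system would expose, and calling code sees only `allocate` and `deallocate`.

```cpp
#include <cstddef>
#include <cstdlib>
#include <memory>
#include <new>
#include <string>
#include <utility>

// Hypothetical abstract memory resource: one subclass per kind of memory.
class MemoryResource {
public:
    virtual ~MemoryResource() = default;
    virtual void* allocate(std::size_t bytes) = 0;
    virtual void deallocate(void* ptr) = 0;
    virtual std::string name() const = 0;
};

// Ordinary host memory obtained from std::malloc.
class HostResource : public MemoryResource {
public:
    void* allocate(std::size_t bytes) override {
        void* p = std::malloc(bytes);
        if (p == nullptr) throw std::bad_alloc();
        return p;
    }
    void deallocate(void* ptr) override { std::free(ptr); }
    std::string name() const override { return "HOST"; }
};

// 64-byte-aligned host memory, standing in for a second kind of memory.
// A real GPU resource would call the vendor runtime here instead.
class AlignedHostResource : public MemoryResource {
public:
    static constexpr std::size_t kAlignment = 64;
    void* allocate(std::size_t bytes) override {
        return ::operator new(bytes, std::align_val_t{kAlignment});
    }
    void deallocate(void* ptr) override {
        ::operator delete(ptr, std::align_val_t{kAlignment});
    }
    std::string name() const override { return "ALIGNED_HOST"; }
};

// Hypothetical allocator handle: callers use the same calls whatever resource backs it.
class Allocator {
public:
    explicit Allocator(std::shared_ptr<MemoryResource> resource)
        : resource_(std::move(resource)) {}
    void* allocate(std::size_t bytes) { return resource_->allocate(bytes); }
    void deallocate(void* ptr) { resource_->deallocate(ptr); }
    std::string getName() const { return resource_->name(); }

private:
    std::shared_ptr<MemoryResource> resource_;
};

// Code written against Allocator is reusable with any resource.
inline double* make_zeroed_array(Allocator& alloc, std::size_t n) {
    auto* data = static_cast<double*>(alloc.allocate(n * sizeof(double)));
    for (std::size_t i = 0; i < n; ++i) data[i] = 0.0;
    return data;
}
```

Because `make_zeroed_array` depends only on the handle, switching the memory behind it means constructing a different `Allocator`, not writing a second version of the function.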

Strategies apply custom algorithms to the allocation process. They can, for example, provide different pooling methods to speed up allocations or apply an operation to every allocation. Strategies can be composed to combine their functionality, allowing flexible and reusable implementations of different components.
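The sketch below illustrates, under stated assumptions, how pooling and composition can work; it is not Umpire’s implementation, and all class names are hypothetical. A pool strategy caches freed blocks in per-size free lists so repeated same-size requests skip the underlying resource, and a size-limit strategy caps bytes in use. Because both expose the same interface as the raw resource, one can wrap the other.

```cpp
#include <cstddef>
#include <cstdlib>
#include <new>
#include <unordered_map>
#include <vector>

// Hypothetical interface shared by raw resources and strategies alike.
struct AllocatorBase {
    virtual ~AllocatorBase() = default;
    virtual void* allocate(std::size_t bytes) = 0;
    virtual void deallocate(void* ptr) = 0;
};

// Leaf resource: asks the system for memory and counts how often it is hit.
class MallocResource : public AllocatorBase {
public:
    void* allocate(std::size_t bytes) override {
        ++calls;
        void* p = std::malloc(bytes);
        if (p == nullptr) throw std::bad_alloc();
        return p;
    }
    void deallocate(void* ptr) override { std::free(ptr); }
    int calls = 0;  // number of real system allocations
};

// Pooling strategy: keeps freed blocks and reuses them for same-size requests.
class PoolStrategy : public AllocatorBase {
public:
    explicit PoolStrategy(AllocatorBase& parent) : parent_(parent) {}
    // Returns cached blocks to the parent; blocks still in use are not reclaimed.
    ~PoolStrategy() override {
        for (auto& entry : free_)
            for (void* p : entry.second) parent_.deallocate(p);
    }
    void* allocate(std::size_t bytes) override {
        std::vector<void*>& blocks = free_[bytes];
        void* p = nullptr;
        if (!blocks.empty()) {
            p = blocks.back();  // reuse a cached block of exactly this size
            blocks.pop_back();
        } else {
            p = parent_.allocate(bytes);
        }
        sizes_[p] = bytes;
        return p;
    }
    void deallocate(void* ptr) override {
        auto it = sizes_.find(ptr);
        free_[it->second].push_back(ptr);  // cache instead of releasing
        sizes_.erase(it);
    }

private:
    AllocatorBase& parent_;
    std::unordered_map<std::size_t, std::vector<void*>> free_;
    std::unordered_map<void*, std::size_t> sizes_;
};

// Size-limit strategy: refuses requests that would exceed a byte budget.
class SizeLimitStrategy : public AllocatorBase {
public:
    SizeLimitStrategy(AllocatorBase& parent, std::size_t limit)
        : parent_(parent), limit_(limit) {}
    void* allocate(std::size_t bytes) override {
        if (in_use_ + bytes > limit_) throw std::bad_alloc();
        void* p = parent_.allocate(bytes);
        sizes_[p] = bytes;
        in_use_ += bytes;
        return p;
    }
    void deallocate(void* ptr) override {
        auto it = sizes_.find(ptr);
        in_use_ -= it->second;
        sizes_.erase(it);
        parent_.deallocate(ptr);
    }

private:
    AllocatorBase& parent_;
    std::size_t limit_;
    std::size_t in_use_ = 0;
    std::unordered_map<void*, std::size_t> sizes_;
};
```

Composing them is just nesting constructors, e.g. `SizeLimitStrategy limited(pool, 512)` on top of `PoolStrategy pool(base)`, so each strategy stays small and reusable on its own.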

Operations provide an abstract interface for modifying data and moving it between Umpire allocators. For example, the move operation enables users to transfer data between two GPU allocations or from GPU to CPU memory. Umpire comes with several strategies and more than a dozen operations built in.
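A move can be thought of as allocate, copy, then free. The following is a minimal sketch of that idea under stated assumptions; it is not Umpire’s code, and `TrackedAllocator`, `copy_bytes`, and `move_allocation` are hypothetical names. Both allocators here use host memory, with the label `"DEVICE_STANDIN"` playing the role of GPU memory; a GPU-aware version would replace the `std::memcpy` with the vendor runtime’s transfer call.

```cpp
#include <cstddef>
#include <cstdlib>
#include <cstring>
#include <new>
#include <string>
#include <unordered_map>
#include <utility>

// Hypothetical allocator that remembers each allocation's size, which an
// operation needs in order to know how many bytes to transfer.
class TrackedAllocator {
public:
    explicit TrackedAllocator(std::string name) : name_(std::move(name)) {}
    void* allocate(std::size_t bytes) {
        void* p = std::malloc(bytes);
        if (p == nullptr) throw std::bad_alloc();
        sizes_[p] = bytes;
        return p;
    }
    void deallocate(void* ptr) {
        sizes_.erase(ptr);
        std::free(ptr);
    }
    std::size_t size_of(void* ptr) const { return sizes_.at(ptr); }
    bool owns(void* ptr) const { return sizes_.count(ptr) != 0; }
    std::size_t live_allocations() const { return sizes_.size(); }
    const std::string& name() const { return name_; }

private:
    std::string name_;
    std::unordered_map<void*, std::size_t> sizes_;
};

// Hypothetical copy operation; a GPU-aware version would choose a
// host-to-device or device-to-host transfer based on where each pointer lives.
inline void copy_bytes(void* dst, const void* src, std::size_t bytes) {
    std::memcpy(dst, src, bytes);
}

// Hypothetical move operation: allocate in the destination, copy, free the source.
inline void* move_allocation(void* ptr, TrackedAllocator& from, TrackedAllocator& to) {
    std::size_t bytes = from.size_of(ptr);
    void* dst = to.allocate(bytes);
    copy_bytes(dst, ptr, bytes);
    from.deallocate(ptr);
    return dst;
}
```

The caller gets back a new pointer owned by the destination allocator, and the original pointer must no longer be used.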

Additionally, Umpire’s introspection capability keeps track of what is allocated where. Users working with complex memory hierarchies can query Umpire for platform name, allocation size, and more.
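One plausible way to track “what is allocated where” is an ordered map keyed by base address, which lets a query find the allocation containing any pointer, even one into the middle of a block. This is a hedged sketch, not Umpire’s internal data structure; `AllocationRecord` and `AllocationMap` are hypothetical names.

```cpp
#include <cstddef>
#include <cstdint>
#include <map>
#include <optional>
#include <string>
#include <utility>

// Hypothetical record describing one live allocation.
struct AllocationRecord {
    std::uintptr_t base;
    std::size_t size;
    std::string allocator_name;
};

// Hypothetical registry: allocators add a record on allocate and remove it on
// deallocate, so users can later ask which allocator owns a pointer.
class AllocationMap {
public:
    void insert(const void* ptr, std::size_t size, std::string allocator_name) {
        auto base = reinterpret_cast<std::uintptr_t>(ptr);
        records_[base] = AllocationRecord{base, size, std::move(allocator_name)};
    }
    void remove(const void* ptr) {
        records_.erase(reinterpret_cast<std::uintptr_t>(ptr));
    }
    // Finds the allocation containing ptr, including interior pointers.
    std::optional<AllocationRecord> find(const void* ptr) const {
        auto addr = reinterpret_cast<std::uintptr_t>(ptr);
        auto it = records_.upper_bound(addr);  // first base strictly above addr
        if (it == records_.begin()) return std::nullopt;
        --it;                                  // greatest base <= addr
        if (addr < it->second.base + it->second.size) return it->second;
        return std::nullopt;
    }
    // Total bytes currently registered, e.g. for a usage report.
    std::size_t total_bytes() const {
        std::size_t total = 0;
        for (const auto& entry : records_) total += entry.second.size;
        return total;
    }

private:
    std::map<std::uintptr_t, AllocationRecord> records_;
};
```

An ordered map keeps lookups logarithmic in the number of live allocations, which is why a sorted structure is a natural choice over a hash map when interior-pointer queries matter.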