Disclaimer: This article is more than two years old. Developments in science and computing happen quickly, and more up-to-date resources on this topic may be available.

Watch a video about this hackathon and view the Flickr photo album.

After 10 years, the “try something new” spirit of Computing’s seasonal hackathon is alive and well. The fall 2022 event featured an Amazon Web Services (AWS) DeepRacer machine learning (ML) competition, in which participants used a cloud-based racing simulator to train an autonomous race car with reinforcement learning (RL) algorithms. The hackathon was open to all LLNL employees, with the top finishers receiving AWS sweatshirts.

The hackathon was sponsored by LLNL’s Office of the Chief Information Officer (OCIO); the Applications, Simulations, and Quality Division; and the Global Security Computing Applications Division. Organizers were Valerie Noble, John Lee, Amber Hartman, Stephen Jacobsohn, Emilia Grzesiak, Josiah Yoshimura, Ashley Basso, and Nicole Armbruster.

The hackathon provided a unique opportunity to combine cloud, data science, and computing technologies. Noble, who recently became LLNL’s deputy CIO for cloud transformation, explained, “Part of my new role is to engage the mission in use of our institutionally deployed AWS platform, LivCloud. I’ve met with data science teams who have already started working with AWS to establish ML/data analytics capabilities to support Laboratory Directed Research and Development Program projects. To help increase awareness of the ways LivCloud could be leveraged for this work, as well as provide our employees with an opportunity for hands-on ML-learning, I thought it might be fun to host an ML-focused hackathon.”

Revving up Virtual Engines

In RL, an agent (car) learns from interactions with its environment (track) and learns how to maximize the rewards (numeric score) from doing so. The DeepRacer driver defines reward functions that specify desirable or undesirable actions in a given state along the track. The simulator lets drivers modify 13 parameters in the reward function, then train their cars on multiple models to build up experience. As each model is updated, it creates more experiences that help train the car to drive itself—for example, a section of the environment outside the track has a lower score than the along the center line. Over time, the car learns that veering outside its lane is not as rewarding as staying in the middle.

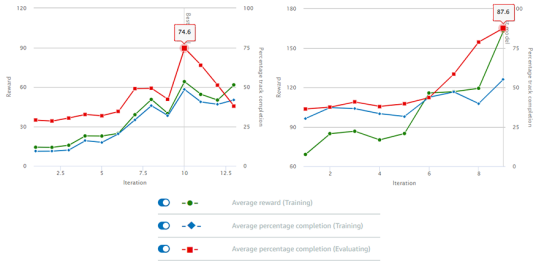

The DeepRacer track doesn’t run in a straight line, so calibrating certain parameters—such as the car’s steering angle, its distance from the center line, and whether all four wheels are on the track—is important. Drivers can choose how long to train each model, and model performance is graphed after each training period is over. Well-calibrated and -trained models have a higher completion percentage. Each LLNL driver had 25 hours to train their models with the AWS simulator.

Simulator Strategies

Working in teams or individually, drivers trained their cars for a time trial—the fastest car wins—and submitted their models ahead of race day. Derrick Aragon, a web developer who transitioned from intern to full-time staff a few days after the hackathon, collaborated with colleagues in Computing’s Enterprise Application Services (EAS) Division. “We decided to work together as a team when training our models, then submit our results individually,” he said. While a student at Chico State University, Aragon learned about convolutional neural networks and other ML fundamentals—knowledge he brought to bear on his team’s approaches to the racing simulator.

“It was a cool feeling to be the new guy with precious information to share,” he noted. “And I had fun tinkering with the code. We exploited the rule about keeping one wheel on the track at all times and were able to reward the car for being partway inside the lane, which helped it take a shorter path.” The EAS team also tried rewarding the car for staying left of the center line, going as fast as possible on straightaways, and going fast without being completely off-track during the turns. “We learned that it’s possible to overtrain the car, like being an overwhelming parent. You have to let the kid learn from its mistakes,” added Aragon, who described the simulator as being both frustrating and addictive. “The car would run perfect laps, then there would be chaos.”

Data scientist Mary Silva decided to participate at Grzesiak’s urging. She stated, “It seemed like a fun way to learn RL and see the results of our algorithm live on the racetrack. Also, it was my first in-person hackathon.” Silva, who was hired in 2020 after interning with LLNL’s Data Science Summer Institute in 2019, teamed up with Grzesiak to create several DeepRacer models. They adjusted the car’s speed, training time, and rewards for track navigation, and their best-performing algorithm turned out to be the one that rewarded the car for traveling “slow and steady.” Silva pointed out, “Resetting the vehicle whenever it fell off course added time, which happened more often when it went too fast.”

The duo ran some of their models overnight in the week leading up to race day. Grzesiak explained, “We learned to iterate quickly to get a feel for which adjustments worked best. Don’t go into a long training period until you’re sure your RL model will be worth the extra time.”

Curiosity compelled Leanne Law, an electrical power distribution system design engineer at the National Ignition Facility, to participate in the hackathon. “I like to learn new skills. I had no prior ML experience, but the online simulator was easier to use than I expected,” she said. Law combined an algorithm that limited the car’s zig-zag motion with one that followed the innermost path around the track. She also trained the car with faster speeds, lower discount factor, and limited the steering angle for right turns, as the track had only one right turn. “ML is a very powerful tool. I tried to understand all the parameters we could adjust in the simulator, then researched how to apply those settings,” Law added.

The Winners Circle

The AWS team set up the physical track in the parking lot of LLNL’s Livermore Valley Open Campus, where drivers took turns running their models with the DeepRacer car. The world record run for this specific track layout is 7 seconds. Law placed first with a winning time of 8.873 seconds. Silva and Grzesiak came in next with a time of 9.658 seconds, while Aragon finished third in 9.872 seconds. Rounding out the top five were Josh DeOtte (10.702 seconds) and JoAnne Levatin (11.204 seconds).

Law had no expectations going into the competition and was surprised to win. “Some people may have been too intimidated by ML to participate, but ML is not that difficult to learn,” she stated, adding in true hackathon spirit, “I’m used to solving problems and thinking through challenges.”

The race day atmosphere was exciting for Silva. She recalled, “We were cheering for each other like at a sporting event. Everyone was kind of holding their breath to see who would win. I was just grateful that our car finished and had no idea it would do as well as it did.” Grzesiak added, “I didn’t know what to expect. That Mary and I could bounce off ideas between each other during the simulator runs was crucial to our success.”

Aragon was pleased that his model performed nearly as well on the physical track as it did in the simulator (9.3 seconds). “I was nervous on race day. The AWS reps warned us that the physical world was much different than the virtual world. The simulator is a perfect sandbox that doesn’t account for variations in light, shadows, uneven surfaces, friction, and other imperfections.”

According to Noble, “Participants were highly motivated by the competitive aspect of the event and wanted to take home the bragging rights. One group was so excited about the competition that they created a logo and made t-shirts with their team’s name—0010 Fast, 0010 Furious.”

Hackathon Hype

Computing’s hackathon tradition has taken root in a new generation of employees. Grzesiak, who co-organized and participated in the event for the first time, looks forward to future hackathons. Silva had heard about hackathons before being hired at LLNL and stated, “They were a selling point for me because they sounded like the times in college when I’d get together with others to code.” For Aragon, the hackathon was a bonding experience with his new team. He said, “It was really fun to treat it as a team activity. We rooted for each other.”

Since the October event, Noble has received numerous messages from participants about their experiences. “Many expressed that having a focus area for the hackathon was a welcome addition to the traditional hackathon format and hoped other topics, such as cyber security and virtual reality, would be incorporated in future hackathons. Another participant sent an email stating it was the best and most memorable hackathon ever and will be one of the highlights of her career,” she said.

The event exceeded Noble's expectations, particularly in terms of employee satisfaction. She continued, “Everyone involved found it to be a rewarding experience, especially those who were exploring ML for the first time. The hackathon exemplifies our creative and innovative culture by providing opportunities for our staff to step outside their boxes and discover new possibilities. One of the Lab’s greatest strengths is the diversity of opportunity with the ability for an employee to pivot their career in a completely different direction at any time.”

—Holly Auten