LLNL is home to the world’s largest Spectra TFinity™ system, following a complete replacement of the tape library hardware that supports Livermore’s unclassified and classified data archives and backup technologies. The new tape library systems help the Laboratory meet some of the most complex data archiving demands in the world and offer the speed, agility, and capacity required to take LLNL into the exascale era. In simple terms, a tape library is a storage system that contains a collection of tape drives, magnetic tape cartridges, a scanner, and robotic mechanisms that automatically move cartridges between storage slots and tape drives on demand.

Large-Scale Data Archiving Demands

When people think of a data archive, most imagine an old, cobweb-ridden, static storage room filled with unwanted data that people rarely access but are afraid to delete. Nothing could be further from the reality at Livermore. LLNL’s high performance computing (HPC) users produce massive quantities of data, averaging 30 terabytes every day. Users often spend weeks or months running simulations that produce valuable data, which cannot be left in ephemeral high performance storage; instead, it is written to a tape library. Not only must the data be kept indefinitely, but much of it is also classified and must be protected to the highest security standards. At any hour of the day, hundreds of tape drives are storing, migrating, and recalling data in parallel.

Upkeep is demanding. As with all technology, data storage hardware grows antiquated and expensive to maintain, so it requires significant upgrades and maintenance at fairly regular intervals (every several years). Behind the scenes, a well-run archive is a constant maelstrom of activity: data must be migrated to newer technology while the system stays smoothly and uninterruptedly responsive to users. Livermore Computing (LC) has built all of these challenges into a maintenance process it has honed over the past six decades.

However, a wholesale hardware swap-out is a much larger, less frequent task, requiring years of planning, procurement, preparation, software development, data migration, and eventually equipment and media destruction. “Moving to a completely new tape library is an enormous undertaking that’s only done once every decade or two,” says Todd Heer, who oversaw the procurement process. “This particular procurement was even more notable because we chose a new vendor—Spectra.”

New Systems, New Capabilities

The new Spectra TFinity systems are novel for Livermore in two ways. First, their tape cartridges are stored differently, in TeraPacks (9–10 cartridges per pack), so more data fits in a smaller footprint. “This physically denser storage solution has added hundreds of square feet of space back to the computing center,” says Debbie Morford, leader of LC’s Scalable Storage Group. “It provides agility in the center as we make space for new systems, like El Capitan.”

Second, the TFinity libraries come in a rack form factor (similar to traditional HPC systems, which house their components in multiple rack enclosures). This design gives Livermore the option of growing the system whenever necessary, simply by adding a frame or two. The current main configuration of the larger classified tape library is 23 racks, which contain 144 “enterprise”-class tape drives and 19,620 slots and can store 294 petabytes of uncompressed data. The new system represents a density improvement of 50% or more over the outgoing LC tape media.
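As a rough sanity check on these figures, the short Python sketch below divides the quoted capacity by the slot and drive counts. It assumes decimal units (1 PB = 1,000 TB) and that every slot holds one full cartridge; neither assumption is stated above, so the results are estimates rather than published specifications.

```python
# Figures quoted for the classified TFinity configuration
capacity_pb = 294      # uncompressed capacity, petabytes
slots = 19_620         # cartridge slots
drives = 144           # "enterprise"-class tape drives

# Assumption: decimal units (1 PB = 1,000 TB) and one full cartridge per slot
tb_per_slot = capacity_pb * 1_000 / slots
slots_per_drive = slots / drives

print(f"~{tb_per_slot:.1f} TB per cartridge")
print(f"~{slots_per_drive:.0f} slots per drive")
```

Under those assumptions, each cartridge holds roughly 15 TB uncompressed, and each drive serves about 136 slots on average.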

Several efficiencies were gained with the TFinity systems. For example, the new tape libraries’ fire suppression feature has reduced planned and unplanned outages. Also, combining archives and backups within the tape library saves valuable system administration time, as staff are able to work on one system rather than two.

Further, the Livermore team wrote an open-source command-line utility, called the Spectra Logic Application Programming Interface, or SLAPI, to help LLNL (and other sites) monitor and manage Spectra Logic tape libraries. Morford says, “SLAPI is particularly significant because sites from around the world now have an easy interface to work with TFinity libraries.” (SLAPI is available at github.com/LLNL/slapi.)

A Pandemic-Sized Interruption

By early 2020, the process of installing the new tape libraries was moving along according to plan. The smaller, unclassified tape library had been deployed into production for more than six months, and the Scalable Storage Group had turned their focus to the more complex, larger-scale classified tape library. While much of the hardware setup and underlying software development work had been completed, the team was running up against several mechanical, hardware, and software challenges while beginning to move the data from the old classified library to the new one.

Then COVID-19 hit. In March 2020, the entire LLNL workforce shifted to working from home in response to the pandemic. “For weeks no one on the team stepped foot on site, which was where most of this work needed to occur,” says Morford. “Once proper safety precautions and processes were established, we had to plan our hardware visits with the utmost concern for the safety, health, and wellbeing of our staff. Things definitely slowed down.”

Still, the team persevered and met an important Level-2 milestone for the National Nuclear Security Administration’s Advanced Simulation and Computing (ASC) Program. By May 2021, the classified tape library was up and running, and the rest of the year was devoted to data migration, a process that, remarkably, causes no downtime for users.

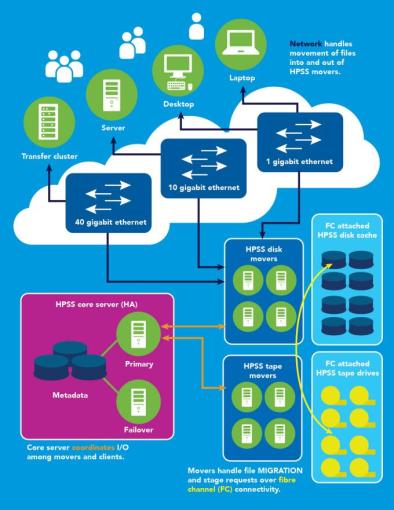

By September, the team had migrated close to 1.3 billion files and 88 petabytes of data. “The data migration process is always interesting, and the last 5 to 10 percent of cartridges are the hardest,” explains Morford. “Our High Performance Storage System (HPSS) application will migrate the data, but the drives and tapes have failures that need to be dealt with along the way. At one point, we had more than 40 cartridges migrating data simultaneously.”
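A similar back-of-the-envelope calculation gives the average file size implied by the migration totals. This sketch assumes decimal units (1 PB = 10^9 MB) and that the 1.3 billion files and 88 petabytes describe the same set of migrated data; an average also says nothing about how widely individual file sizes vary.

```python
# Migration totals quoted as of September
files_migrated = 1.3e9   # "close to 1.3 billion files"
data_pb = 88             # petabytes migrated

# Assumption: decimal units, so 1 PB = 1e9 MB
avg_file_mb = data_pb * 1e9 / files_migrated

print(f"Average file size: ~{avg_file_mb:.0f} MB")
```

That works out to roughly 68 MB per file on average.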

A Plan for the Future

The sheer size, complexity, and importance of Livermore’s tape libraries require staff to plan for the long term, even when future budgets and technology advances are unknown. Heer explains, “Our plan is to procure maintenance in five-year increments and add just enough racks to allow for near-future growth, which realizes efficiencies in cost and overall size. We’ll also be able to capitalize on ever-increasing tape-drive capacities. In time, the library capacity will multiply simply as a function of generational drive technology improvements.”

Also around the corner for the team is the careful and precise destruction of more than 14,000 cartridges containing classified media, as well as the dismantling of the old library racks in both unclassified and classified centers.

“We’re really proud of this new system and its significance to our HPC users today and in the future,” says Heer. The TFinity tape library is funded by the ASC Program and serves the tri-lab community.