Project Summary

Supercomputer applications face data-management challenges that come both from the system (a complex hierarchy of memory and storage) and from the workload (large, complex datasets). UMap is an open-source, user-level library that acts as an intermediate tier between the application, its datasets, and the system's memory and storage hierarchy.

UMap exploits the increasingly prominent role of complex memory in today’s servers and offers new capabilities for directly accessing large memory-mapped datasets. For high performance, UMap provides flexible configuration options that let each application customize how its pages are handled.

An mmap()-Like Interface

The UMap user-level library provides a high-performance, application-configurable, unified-memory-like interface to diverse data located in storage, on a memory server, or distributed across a network. Its frontend provides a memory-like interface: applications access a virtual memory region created by UMap just as they would access memory-resident data in C, through ordinary pointers.
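To illustrate what an mmap()-like interface means for application code, here is a minimal sketch that uses plain POSIX mmap() as a stand-in for UMap, an assumption made so the example runs without the library installed. Once the file is mapped, its contents are read through an ordinary C pointer, and the first touch of each page raises a page fault that is serviced behind the application's back (by the kernel here; by the UMap backend when UMap manages the region). The helper names `sum_mapped_doubles` and `write_test_file` are hypothetical.

```c
#include <assert.h>
#include <fcntl.h>
#include <stddef.h>
#include <stdio.h>
#include <sys/mman.h>
#include <sys/stat.h>
#include <unistd.h>

/* Hypothetical demo helper: write the doubles 0, 1, ..., n-1 to a file. */
int write_test_file(const char *path, size_t n) {
    FILE *f = fopen(path, "wb");
    if (f == NULL) return -1;
    for (size_t i = 0; i < n; i++) {
        double v = (double)i;
        if (fwrite(&v, sizeof v, 1, f) != 1) { fclose(f); return -1; }
    }
    return fclose(f);
}

/* Map a file of doubles and sum its elements through a plain pointer.
 * Assumption: POSIX mmap() stands in for the UMap mapping call. The loop
 * is ordinary array access; the first touch of each page faults, and the
 * fault is serviced transparently before the load completes. */
double sum_mapped_doubles(const char *path) {
    int fd = open(path, O_RDONLY);
    if (fd < 0) return -1.0;
    struct stat st;
    if (fstat(fd, &st) != 0 || st.st_size == 0) { close(fd); return -1.0; }
    size_t len = (size_t)st.st_size;
    void *base = mmap(NULL, len, PROT_READ, MAP_PRIVATE, fd, 0);
    close(fd); /* the mapping remains valid after the descriptor is closed */
    if (base == MAP_FAILED) return -1.0;

    const double *data = base;
    double sum = 0.0;
    for (size_t i = 0; i < len / sizeof(double); i++)
        sum += data[i];
    munmap(base, len);
    return sum;
}
```

Writing 1,000 doubles and summing them through the mapping yields 499500.0. The application code never issues an explicit read; data movement is driven entirely by page faults, which is what allows a library such as UMap to decide where each page comes from.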

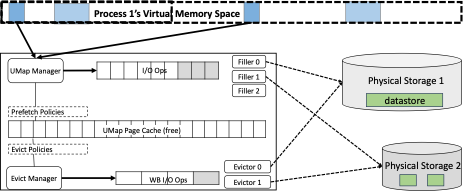

When an application accesses a virtual memory region created by UMap, the backend fetches pages of the data on demand. The backend includes a set of predefined handlers for data sources commonly used by HPC applications, such as a file-based handler, so that engineering effort can be reused across applications. If an application uses a special-purpose data store, the developer can extend the backend handler to specify how data in the user-mapped memory region is managed, that is, how it is transferred and marshalled between the data store and physical memory.
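A handler that can be extended per data store is essentially a small table of callbacks. The sketch below shows one way to express that in C. The `store_t` interface, the in-memory array store, and the `fill_page`/`evict_page` helpers are all hypothetical illustrations of the idea, not UMap's actual backend API.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>
#include <sys/types.h>

/* Hypothetical backend-handler interface (not UMap's actual API): a data
 * store supplies callbacks that move bytes between the store and a page
 * buffer in physical memory, doing whatever marshalling it needs. */
typedef struct store {
    ssize_t (*read_bytes)(struct store *self, char *buf, size_t len, off_t off);
    ssize_t (*write_bytes)(struct store *self, const char *buf, size_t len, off_t off);
    void *state;
} store_t;

/* Example special-purpose store: a fixed-size byte array in memory. */
typedef struct { char *bytes; size_t size; } array_store_state_t;

static ssize_t array_read(store_t *self, char *buf, size_t len, off_t off) {
    array_store_state_t *s = self->state;
    if (off < 0 || (size_t)off >= s->size) return 0;
    size_t avail = s->size - (size_t)off;
    size_t n = avail < len ? avail : len;
    memcpy(buf, s->bytes + off, n);
    return (ssize_t)n;
}

static ssize_t array_write(store_t *self, const char *buf, size_t len, off_t off) {
    array_store_state_t *s = self->state;
    if (off < 0 || (size_t)off >= s->size) return 0;
    size_t avail = s->size - (size_t)off;
    size_t n = avail < len ? avail : len;
    memcpy(s->bytes + off, buf, n);
    return (ssize_t)n;
}

store_t make_array_store(array_store_state_t *state) {
    store_t st = { array_read, array_write, state };
    return st;
}

/* Generic fill path: fetch page `index` from any store, zero-filling
 * whatever lies past the end of the stored data. */
ssize_t fill_page(store_t *st, char *page, size_t page_size, size_t index) {
    ssize_t n = st->read_bytes(st, page, page_size, (off_t)(index * page_size));
    if (n < 0) return n;
    memset(page + n, 0, page_size - (size_t)n);
    return n;
}

/* Generic write-back path, used when a dirty page is evicted. */
ssize_t evict_page(store_t *st, const char *page, size_t page_size, size_t index) {
    return st->write_bytes(st, page, page_size, (off_t)(index * page_size));
}
```

The design point is that `fill_page` and `evict_page` never depend on the store type: supporting a new data store means supplying a new pair of callbacks, while the paging logic is reused unchanged.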

A Set of Workers: Manager and Evictor

Instead of relying on traditional signal handlers, UMap runs a “Manager” that listens for page-fault events, which the Linux kernel delivers asynchronously as messages on a “userfaultfd” file descriptor. A collection of “Fillers” satisfies the page-fill requests queued by the Manager. Pages can be filled from various sources, both local and remote.
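The division of labor between the Manager and the Fillers is a producer-consumer pattern. The sketch below illustrates it with POSIX threads under stated assumptions: fault events are simulated as a list of page indices rather than read from a userfaultfd descriptor (which requires kernel support and, on many systems, extra privileges), and "filling" a page only marks it as filled. All names are hypothetical.

```c
#include <assert.h>
#include <pthread.h>
#include <stdbool.h>
#include <stddef.h>

#define QUEUE_CAP 64
#define MAX_FILLERS 16

/* Bounded queue of page-fill requests shared by the Manager (producer)
 * and the Fillers (consumers). */
typedef struct {
    size_t items[QUEUE_CAP];
    size_t head, tail, count;
    bool closed;
    pthread_mutex_t mu;
    pthread_cond_t not_empty, not_full;
} fill_queue_t;

static void queue_init(fill_queue_t *q) {
    q->head = q->tail = q->count = 0;
    q->closed = false;
    pthread_mutex_init(&q->mu, NULL);
    pthread_cond_init(&q->not_empty, NULL);
    pthread_cond_init(&q->not_full, NULL);
}

static void queue_push(fill_queue_t *q, size_t page) {
    pthread_mutex_lock(&q->mu);
    while (q->count == QUEUE_CAP)
        pthread_cond_wait(&q->not_full, &q->mu);
    q->items[q->tail] = page;
    q->tail = (q->tail + 1) % QUEUE_CAP;
    q->count++;
    pthread_cond_signal(&q->not_empty);
    pthread_mutex_unlock(&q->mu);
}

/* Blocks until a request is available; returns false once the queue
 * has been closed and fully drained. */
static bool queue_pop(fill_queue_t *q, size_t *page) {
    pthread_mutex_lock(&q->mu);
    while (q->count == 0 && !q->closed)
        pthread_cond_wait(&q->not_empty, &q->mu);
    bool ok = q->count > 0;
    if (ok) {
        *page = q->items[q->head];
        q->head = (q->head + 1) % QUEUE_CAP;
        q->count--;
        pthread_cond_signal(&q->not_full);
    }
    pthread_mutex_unlock(&q->mu);
    return ok;
}

static void queue_close(fill_queue_t *q) {
    pthread_mutex_lock(&q->mu);
    q->closed = true;
    pthread_cond_broadcast(&q->not_empty);
    pthread_mutex_unlock(&q->mu);
}

typedef struct { fill_queue_t *q; int *filled; } filler_arg_t;

/* Filler thread: services requests until the Manager closes the queue. */
static void *filler_main(void *p) {
    filler_arg_t *a = p;
    size_t page;
    while (queue_pop(a->q, &page))
        a->filled[page] = 1; /* a real filler would copy the page in from its store */
    return NULL;
}

/* Manager: queues one fill request per (simulated) faulting page, then
 * waits for the fillers to drain the queue. Assumes no duplicate faults;
 * a real manager would coalesce repeated faults on the same page. */
void run_manager(const size_t *faults, size_t nfaults, int *filled, int nfillers) {
    if (nfillers < 1) nfillers = 1;
    if (nfillers > MAX_FILLERS) nfillers = MAX_FILLERS;
    fill_queue_t q;
    queue_init(&q);
    filler_arg_t arg = { &q, filled };
    pthread_t threads[MAX_FILLERS];
    for (int i = 0; i < nfillers; i++)
        pthread_create(&threads[i], NULL, filler_main, &arg);
    for (size_t i = 0; i < nfaults; i++)
        queue_push(&q, faults[i]);
    queue_close(&q);
    for (int i = 0; i < nfillers; i++)
        pthread_join(threads[i], NULL);
    pthread_mutex_destroy(&q.mu);
    pthread_cond_destroy(&q.not_empty);
    pthread_cond_destroy(&q.not_full);
}
```

Because the Manager only enqueues work, it can return to listening for the next fault event immediately, while the number of Filler threads determines how many fetches proceed in parallel.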

Fetched pages occupy a UMap page cache that is separate from the system page cache. As this cache fills up, an “Evict Manager” schedules eviction actions, which are carried out by a set of “Evictors.” The application can set the UMap page size (a multiple of the default 4 KiB page size), the page cache size, prefetch policies that optimize data access, eviction policies, and the number of threads allocated as fillers or evictors.
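One way to decide when "the cache fills up" is a pair of watermarks: once occupancy reaches a high watermark, pages are evicted until occupancy drops to a low watermark. The sketch below assumes that scheme with a simple FIFO policy; the watermarks, the policy, and every name are illustrative assumptions, and eviction runs inline rather than on separate Evictor threads.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <string.h>

#define CACHE_MAX 1024

/* Hypothetical page cache with FIFO eviction between two watermarks.
 * Dirty pages are counted as written back to the data store on eviction. */
typedef struct {
    size_t page[CACHE_MAX];  /* resident page ids, oldest first */
    bool dirty[CACHE_MAX];
    size_t count;
    size_t high, low;        /* watermarks in pages (low < high <= CACHE_MAX) */
    size_t evicted, written_back;
} page_cache_t;

void cache_init(page_cache_t *c, size_t high, size_t low) {
    memset(c, 0, sizeof *c);
    c->high = high;
    c->low = low;
}

/* The "evict manager" step: evict the oldest pages down to the low mark. */
static void evict_down_to_low(page_cache_t *c) {
    size_t n = c->count - c->low;  /* number of oldest pages to evict */
    for (size_t i = 0; i < n; i++) {
        if (c->dirty[i]) c->written_back++;  /* stands in for a write-back */
        c->evicted++;
    }
    memmove(c->page, c->page + n, c->low * sizeof c->page[0]);
    memmove(c->dirty, c->dirty + n, c->low * sizeof c->dirty[0]);
    c->count = c->low;
}

bool cache_contains(const page_cache_t *c, size_t id) {
    for (size_t i = 0; i < c->count; i++)
        if (c->page[i] == id) return true;
    return false;
}

/* Called after a filler brings page `id` in; `dirty` marks a written page. */
void cache_insert(page_cache_t *c, size_t id, bool dirty) {
    if (c->count >= c->high)
        evict_down_to_low(c);
    c->page[c->count] = id;
    c->dirty[c->count] = dirty;
    c->count++;
}
```

With a high watermark of 4 and a low watermark of 2, inserting pages 0 through 4 (page 1 dirty) evicts pages 0 and 1, writes back one page, and leaves pages 2, 3, and 4 resident. Evicting in batches rather than one page at a time gives Evictors a burst of work to share and keeps headroom free for incoming fills.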

To ease adaptation to application characteristics, the smallest unit of data moved between the data store and the buffer in physical memory is abstracted as the UMap page size, which can be set explicitly in user space without any system-level configuration. Because the page cache size is controlled by the application, the application is insulated from fluctuations in the operating system's memory allocations.
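The arithmetic behind choosing a UMap page size is simple but worth making concrete. With 64 KiB pages, a 1 GiB page cache holds 2^30 / 2^16 = 16,384 pages, and a 10-byte read starting at byte offset 65,530 straddles a page boundary and touches two pages. The hypothetical helpers below capture these calculations, assuming a 4,096-byte base page.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* A page size is accepted here if it is a nonzero multiple of the base
 * (system) page size, e.g. 4096 bytes. */
bool page_size_ok(size_t psize, size_t base_psize) {
    return psize != 0 && psize % base_psize == 0;
}

/* Index of the page that holds byte `offset` of the mapped region. */
uint64_t page_index(uint64_t offset, uint64_t psize) {
    return offset / psize;
}

/* Number of pages touched by an access of `len` bytes (len > 0) starting
 * at `offset`; on a cache miss, each one costs a separate fetch. */
uint64_t pages_spanned(uint64_t offset, uint64_t len, uint64_t psize) {
    return page_index(offset + len - 1, psize) - page_index(offset, psize) + 1;
}

/* How many pages fit in a page cache of `cache_bytes` bytes. */
uint64_t cache_capacity_pages(uint64_t cache_bytes, uint64_t psize) {
    return cache_bytes / psize;
}
```

Larger pages reduce the number of faults for sequential scans but move more unused bytes per fault under sparse, random access, which is why making the page size an application-level setting matters.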

Adapting to Different Applications

Because the number of threads in the filler and evictor groups can be scaled up or down, the UMap library can adapt to different application characteristics. For instance, an application with a high read/write ratio can assign more threads as fillers than as evictors. The fillers and evictors are themselves pluggable, which makes the management of the data store extensible. A default filler is provided for file-backed, out-of-core data. A SparseStore filler is optimized for concurrent access to sparse data. A network filler accesses data stores on a remote server, such as a memory server. A “ZFP” filler automatically compresses and decompresses floating-point array data with the zfp compression library, while the application accesses each array element in its uncompressed form.
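The filler/evictor trade-off can be made concrete with a small heuristic. The sketch below is a hypothetical policy, not UMap's: it splits a fixed thread budget in proportion to the fraction of page traffic that is reads, on the reasoning that fillers service read faults while evictors mostly write dirty pages back, and it keeps at least one thread in each group.

```c
#include <assert.h>

/* Hypothetical heuristic (not UMap's policy): split a thread budget
 * between fillers and evictors in proportion to the read fraction of
 * page traffic, keeping at least one thread in each group. */
typedef struct { int fillers; int evictors; } thread_split_t;

thread_split_t split_threads(int budget, double read_fraction) {
    thread_split_t s = { 1, 1 };
    if (budget < 2) return s;  /* minimum of one thread per group */
    if (read_fraction < 0.0) read_fraction = 0.0;
    if (read_fraction > 1.0) read_fraction = 1.0;
    int f = (int)(budget * read_fraction + 0.5);  /* round to nearest */
    if (f < 1) f = 1;
    if (f > budget - 1) f = budget - 1;
    s.fillers = f;
    s.evictors = budget - f;
    return s;
}
```

For a budget of 16 threads and a workload that is 75% reads, this yields 12 fillers and 4 evictors; a read-only workload still keeps one evictor so that clean pages can be reclaimed when the cache fills.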

Shielding Users from Complexity

By providing a simple interface to applications, the UMap library shields users from the complexity of achieving scalability and coherence for read and write accesses at high concurrency. Such concurrency is common in multithreaded applications running on HPC systems with many hardware cores and threads.

Software

UMap is open source and is part of LLNL’s Memory-Centric Architectures project. It is available for download via GitHub.

Acknowledgement

This research was supported by the Exascale Computing Project (17-SC-20-SC), a joint project of the U.S. Department of Energy’s Office of Science and National Nuclear Security Administration.

This work was performed under the auspices of the U.S. Department of Energy by Lawrence Livermore National Laboratory under contract No. DE-AC52-07NA27344.