The multiphysics simulation codes used at U.S. national security laboratories are evolving, even as the goal remains constant: to model and ultimately predict the behavior of complex physical systems. To meet the needs of users, such codes (most of which were written many decades ago) must perform at scale on present and future massively parallel supercomputers, be adaptable and extendable, be sustainable across multiple generations of hardware, and work on general 2D and 3D domains.

To fulfill these requirements, Lawrence Livermore, Los Alamos, and Sandia national laboratories are developing next-generation multiphysics simulation capabilities through the Advanced Technology Development and Mitigation subprogram, under the National Nuclear Security Administration’s (NNSA’s) Advanced Simulation and Computing (ASC) Program, in collaboration with the Department of Energy’s (DOE’s) Exascale Computing Project.

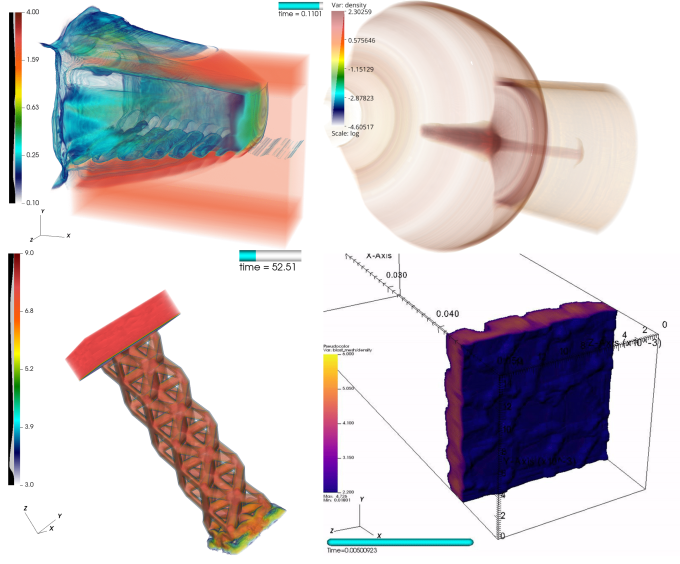

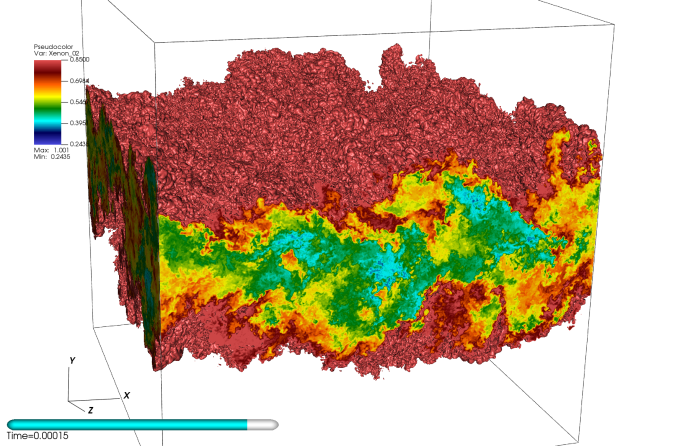

At Lawrence Livermore National Laboratory (LLNL), this work takes place under a massive software development effort called the Multiphysics on Advanced Platforms Project (MAPP). The project incorporates multiple physics, mathematics, and computer science packages into one integrated code. MARBL is a multiphysics code developed through MAPP that will address the modeling needs of the high-energy-density physics (HEDP) community for simulating high-explosive, magnetic, or laser-driven experiments. Such experiments include inertial confinement fusion, pulse-power magnetohydrodynamics, equation of state, and material strength studies supporting NNSA’s Stockpile Stewardship Program.

Project lead Rob Rieben notes, “An important driver for MAPP’s creation in 2015 was the emergence of advanced high performance computing (HPC) architectures based on graphics processing units (GPUs). This big move in hardware system development was part of the national drive toward exascale computing platforms at DOE facilities. The MARBL code must be able to scale to these exaflop-class computers, while also scaling to the current 100-petaflop, pre-exascale systems. It’s a daunting challenge.”

MAPP Brings Modularity to Building Codes

To meet this scaling challenge, the team uses high-order numerical methods and a modular approach to code development. MAPP computer science lead Kenny Weiss explains that high-order numerical discretizations yield more accurate results, allowing for meshes with “curved-edge” elements, whereas low-order methods operate on straight-edged elements. The modular approach makes use of software libraries, which serve as software “building blocks” that can be customized and shared between codes, from research and prototype to production.
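The difference between straight-edged and curved-edge elements can be illustrated with a small, self-contained sketch. It is not taken from MARBL or any MAPP library. It assumes a single element edge meant to follow a quarter of the unit circle, and compares a linear edge (two end nodes) with a quadratic Lagrange edge (end nodes plus one midpoint node). The function names are hypothetical and chosen only for this illustration.

```python
import math

def linear_edge(p0, p2, t):
    """Straight edge: blend the two end nodes, t in [0, 1]."""
    return tuple((1.0 - t) * a + t * b for a, b in zip(p0, p2))

def quadratic_edge(p0, p1, p2, t):
    """Curved edge: quadratic Lagrange interpolation through the
    end nodes (t = 0, 1) and a midpoint node (t = 0.5)."""
    n0 = 2.0 * (t - 0.5) * (t - 1.0)
    n1 = 4.0 * t * (1.0 - t)
    n2 = 2.0 * t * (t - 0.5)
    return tuple(n0 * a + n1 * b + n2 * c for a, b, c in zip(p0, p1, p2))

def max_radius_error(edge, samples=101):
    """Largest deviation of the edge from the unit circle (radius 1)."""
    return max(
        abs(math.hypot(*edge(i / (samples - 1))) - 1.0)
        for i in range(samples)
    )

# Assumed test geometry: three nodes on a quarter arc of the unit circle.
p0 = (1.0, 0.0)
p1 = (math.cos(math.pi / 4), math.sin(math.pi / 4))
p2 = (0.0, 1.0)

linear_error = max_radius_error(lambda t: linear_edge(p0, p2, t))
quadratic_error = max_radius_error(lambda t: quadratic_edge(p0, p1, p2, t))

print(f"straight edge max error: {linear_error:.4f}")
print(f"curved edge max error:   {quadratic_error:.4f}")
```

With the same two end nodes, the straight edge cuts across the arc, while the quadratic edge stays much closer to it. This is one way to see why a high-order mesh can represent curved geometry with fewer elements.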

For example, Axom is a modular computer science software infrastructure that handles data storage, checkpointing, and computational geometry—components that form the foundation on which MARBL is built. Exemplifying the “extreme modularity” approach, MARBL was designed from the start to support diverse algorithms, including Arbitrary Lagrangian–Eulerian (ALE) and direct Eulerian methods for solving the conservation laws key to physics simulations.

These algorithms provide higher resolution and accuracy per unknown compared to standard low-order finite element methods. They also have computational characteristics that complement current and emerging HPC architectures. The higher flop-to-byte ratios of these numerical methods provide strong parallel scalability, better throughput on GPU platforms, and increased computational efficiency.
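The flop-to-byte argument can be made concrete with a rough counting model. The article does not say how MARBL evaluates its operators, so everything in this sketch is an assumption: tensor-product elements of polynomial order p, a sum-factorized interpolation from degrees of freedom to quadrature points with p + 1 points per dimension, double-precision values, and memory traffic limited to reading the input values once and writing the outputs once. The function name is hypothetical.

```python
def sum_factorized_intensity(p, dim=3, bytes_per_value=8):
    """Estimated flops per byte for one element under the assumed model.

    Each of the `dim` one-dimensional contractions produces (p+1)**dim
    values, and each value costs (p+1) multiply-adds (2 flops apiece).
    """
    n1d = p + 1
    flops = dim * 2 * n1d ** (dim + 1)
    # Read (p+1)**dim inputs and write (p+1)**dim outputs.
    bytes_moved = 2 * bytes_per_value * n1d ** dim
    return flops / bytes_moved

for order in (1, 2, 4, 8):
    print(order, sum_factorized_intensity(order))
```

Under these assumptions the ratio simplifies to 3(p + 1)/8 in 3D, so it grows linearly with the polynomial order. Real kernels move more data than this model counts, so the absolute numbers are illustrative only. The trend is the point: higher order means more arithmetic per byte fetched, which suits GPUs.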

Collaboration Is Crucial for Success

Rieben and Weiss both stress that co-design and interdisciplinary collaboration are critical for the project’s success. MAPP is multidisciplinary in nature, requiring physicists, engineers, applied mathematicians, and computer scientists to work closely together.

Weiss explains, “For modular codes, we depend on experts outside our core team to contribute to our codebase. We established workflows to help with onboarding, making it easy to access, build, document, and test the code. We also make extensive use of open-source libraries in developing MAPP software. For example, of the more than 4 million lines of code in MARBL, around 50 percent was developed from LLNL open-source libraries, and another 30 percent came from open-source community libraries.”

The team also created workflows for end users that streamline inputting data, running code, and iterating and analyzing results. For example, an LLNL-developed “in situ visualization” package lets users probe mesh fields while a simulation is running. Rieben says, “Since one problem can require thousands of simulations, users need to conduct analyses and modify parameters in real time, rather than having to wait until the end.”

Challenges Met, Challenges for the Future

In late 2020, as part of an NNSA ASC Level-1 milestone, MARBL was run on the GPU-based Sierra supercomputer and Sandia’s ARM-based Astra supercomputer, successfully scaling to the entire Astra system and half of Sierra.

Rieben points out that this accomplishment is all the more impressive given that a substantial portion of MARBL’s development and testing occurred in 2020 during the COVID-19 pandemic. During that year, MAPP also (virtually) hosted five summer students and funded three Ph.D. projects through the Weapons and Complex Integration (WCI) Principal Directorate’s HEDP Graduate Fellowship Program. (As of 2023, WCI is called Strategic Deterrence.)

The MAPP team is now focused on upcoming challenges, including preparing codes for LLNL’s next-generation supercomputer, El Capitan, which comes online in 2023. The team is also refining tools that will enable the coupling of high-order meshes with libraries based on low-order meshes employed in current multiphysics production codes.

Another project is to create workflows that use machine learning and optimization, allowing users to design an experiment in a more automated way. The timescale for developing massive codes is long; their useful lifespan is even longer.

“With MAPP, we are five years in on a decades-long project,” notes Rieben. “These codes are complex and require many years to develop, verify, and validate. It takes around eight years to stand up, followed by a decade or two of use.”

And it doesn’t stop there. MAPP’s investments in modular infrastructure and data services will feed into future code development and be key in transforming today’s research and prototype codes into tomorrow’s production codes for the exascale era and beyond.