Machine learning (ML) has become an important part of many scientific computing projects, but effectively integrating ML techniques with massive datasets into large-scale HPC workflows is no easy task.

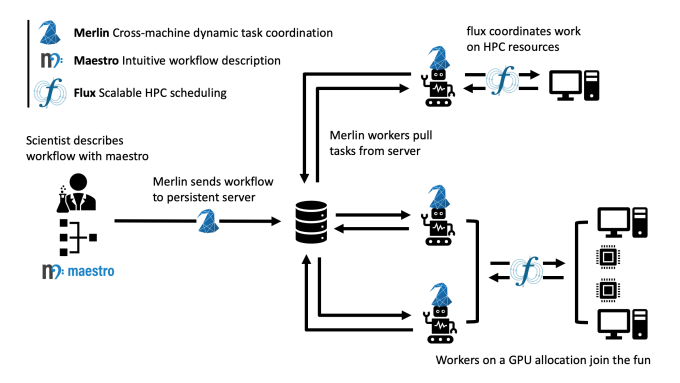

To address this, an LLNL team developed Merlin. Merlin coordinates complex workflows through a persistent external queue server that lives outside of users’ high performance computing (HPC) systems but can communicate with nodes on users’ clusters. This dedicated server is the central component of the design: it holds the tasks in a user’s workflow until workers retrieve and execute them. Because the task server is separate from any single cluster, workers on several machines can draw from it at once, so one workflow can run across multiple machines simultaneously.
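The pull-based pattern described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not Merlin’s implementation. An in-process `queue.Queue` stands in for the persistent external queue server (in practice Celery talks to a message broker such as RabbitMQ or Redis), and threads stand in for workers. The machine names `cluster-a` and `cluster-b` and the squaring “simulation” are hypothetical.

```python
import queue
import threading
from collections import defaultdict


def run_workers(tasks, machines, workers_per_machine):
    """Workers on several (simulated) machines pull tasks from one shared queue."""
    # Stand-in for the external task server (hypothetical; a real deployment
    # would use a message broker running outside the HPC systems).
    task_server = queue.Queue()
    for task in tasks:
        task_server.put(task)

    results = {}
    done_by = defaultdict(list)  # which machine processed which tasks
    lock = threading.Lock()

    def worker(machine):
        while True:
            try:
                task = task_server.get_nowait()
            except queue.Empty:
                return  # queue drained, so this worker exits
            result = task * task  # hypothetical "simulation": square the input
            with lock:
                results[task] = result
                done_by[machine].append(task)

    # Start the same number of workers on each simulated machine.
    threads = [
        threading.Thread(target=worker, args=(machine,))
        for machine in machines
        for _ in range(workers_per_machine)
    ]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
    return results, dict(done_by)


results, done_by = run_workers(range(20), ["cluster-a", "cluster-b"], 3)
print(len(results), {m: len(ts) for m, ts in done_by.items()})
```

Because workers pull tasks from a shared server rather than being handed a fixed slice of the work up front, adding workers on another machine raises throughput without changing the task list.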

By providing a central place to manage tasks, the external task server helps allocate resources efficiently and improves workflow performance. The approach also scales well, making Merlin a good fit for large-scale ML-driven workflows, which often require more concurrent simulations than a standard HPC workflow can typically execute.

Under the hood, Merlin builds on Celery and Maestro. Celery coordinates tasks and “workers” (the executors that process tasks) through the external server, while Merlin tells Celery how many workers to start, how many task queues to create, and which workers should attach to which queue. Maestro, meanwhile, creates a directed acyclic graph (DAG) describing the dependencies between the steps in a scientific workflow, and Merlin uses that graph to determine which steps can run in parallel.
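The following sketch shows how a dependency graph reveals which steps can run in parallel. It groups steps into stages using Python’s standard-library `graphlib.TopologicalSorter` (Python 3.9+). This is not Maestro’s or Merlin’s API, and the step names and dependencies are hypothetical. Steps in the same stage do not depend on one another, so they could be dispatched to workers concurrently.

```python
from graphlib import TopologicalSorter


def parallel_stages(dependencies):
    """Group DAG steps into stages; steps within a stage have no mutual dependencies."""
    sorter = TopologicalSorter(dependencies)
    sorter.prepare()  # also raises CycleError if the graph is not acyclic
    stages = []
    while sorter.is_active():
        # Every step whose prerequisites have all finished is ready now.
        ready = sorted(sorter.get_ready())
        stages.append(ready)
        sorter.done(*ready)  # mark the whole stage as finished
    return stages


# Hypothetical study: each key depends on the steps in its set.
steps = {
    "setup": set(),
    "simulate": {"setup"},
    "preprocess_data": {"setup"},
    "collect": {"simulate"},
    "train_model": {"collect", "preprocess_data"},
}
print(parallel_stages(steps))
# → [['setup'], ['preprocess_data', 'simulate'], ['collect'], ['train_model']]
```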

Together, Celery, Maestro, and the external task server give Merlin workflows near-linear scaling of total execution time: as the computational workload grows, execution time grows roughly in proportion to it, rather than increasing much faster than the workload does. Linear scaling is highly desirable in HPC environments because it keeps the system responsive as problems grow, which makes Merlin suitable for a wide range of computing challenges.
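One way to check a near-linear scaling claim is to estimate the exponent `k` in `T ≈ c · W^k` from measured execution times `T` at several workload sizes `W`. A value of `k` close to 1 means linear scaling, and a value well above 1 means time grows faster than the workload. The sketch below fits `k` as the least-squares slope of `log T` against `log W`. The timing numbers are synthetic, made up purely for illustration, and are not Merlin benchmarks.

```python
import math


def scaling_exponent(workloads, times):
    """Least-squares slope of log(time) versus log(workload).

    A slope near 1 indicates roughly linear scaling; a slope well above 1
    indicates superlinear growth in execution time.
    """
    xs = [math.log(w) for w in workloads]
    ys = [math.log(t) for t in times]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    return cov / var


# Synthetic numbers for illustration only (not Merlin measurements).
samples = [1_000, 10_000, 100_000, 1_000_000]
linear_times = [0.5 * s + 30 for s in samples]  # per-sample cost plus fixed overhead
quadratic_times = [1e-4 * s**2 for s in samples]  # superlinear for comparison

print(round(scaling_exponent(samples, linear_times), 2))
print(round(scaling_exponent(samples, quadratic_times), 2))
```

The fixed overhead in the linear case pulls the fitted slope slightly below 1 at small workloads, which is one reason measured scaling is often described as “near-linear” rather than exactly linear.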

In addition to near-linear scaling, Merlin supports iterative workflows: it can run successive variations of the same workflow, each building on the results of the previous one. This capability is useful in exploratory research, optimization, data analysis and modeling, simulation, and experimentation. It has been used by the inertial confinement fusion community, whose large workflows require long processing times. The large-scale workflows Merlin enables can also be used to train deep neural networks, and they will help test El Capitan’s capabilities when the system comes online in 2024.
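The iterative idea can be illustrated with a generic refine-and-repeat loop, in which each round samples around the best result from the previous round. This is a hypothetical sketch of the pattern, not how Merlin itself launches iterations. `objective` is a made-up stand-in for a simulation, and the starting region, sample count, and halving rule are arbitrary assumptions.

```python
import random


def objective(x):
    """Hypothetical stand-in for a simulation: the quantity to minimize."""
    return (x - 3.0) ** 2


def iterative_study(iterations=5, samples_per_iter=20, seed=0):
    rng = random.Random(seed)
    center, width = 0.0, 10.0  # initial search region (hypothetical)
    history = []
    for _ in range(iterations):
        # This round samples around the best point found so far...
        xs = [rng.uniform(center - width, center + width) for _ in range(samples_per_iter)]
        # ...keeping the previous best as a candidate so results never get worse.
        best = min(xs + [center], key=objective)
        history.append(best)
        # The next round builds on this one with a narrower search region.
        center, width = best, width / 2
    return history


history = iterative_study()
print([round(x, 3) for x in history])
```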

Merlin is open source and managed by the Workflow Enablement and AdVanced Environment (WEAVE) team at LLNL, who also manage Maestro.