Disclaimer: This article is more than two years old. Developments in science and computing happen quickly, and more up-to-date resources on this topic may be available.

The Department of Energy (DOE) launched the Exascale Computing Project (ECP) in 2016 to mobilize hardware, software, and application integration efforts in preparation for exascale-class supercomputers, which are capable of at least a quintillion (10¹⁸) calculations per second. In the years since, the DOE has launched the nation’s exascale era with Oak Ridge National Lab’s Frontier system, which will soon be followed by Argonne National Lab’s Aurora and Livermore’s El Capitan.

Enter the concept of co-design, which emphasizes collaborative research and development across the DOE complex as well as with other stakeholders. Co-design activities integrate the work of scientists, code teams, software developers, and hardware vendors to establish standardized solutions to common challenges while anticipating next-generation computing environments. Among the ECP’s several co-design teams is the Center for Efficient Exascale Discretizations (CEED, pronounced “seed”), led by LLNL computational mathematician Tzanio Kolev. As the ECP’s mandate formally concludes this autumn, so too does CEED’s.

CEED includes more than 30 researchers from Livermore, Argonne, and five universities: Rensselaer Polytechnic Institute, University of Colorado at Boulder, University of Illinois Urbana-Champaign, University of Tennessee, and Virginia Tech. They meet every year with the scientific computing community to describe CEED’s work, form new collaborations, and learn from each other. Livermore hosted the final annual meeting on August 1–3. (Read about event highlights in the article Meeting of the minds: advanced math for the exascale era.) In addition to Kolev, current LLNL contributors to CEED are John Camier, Veselin Dobrev, Yohann Dudouit, Ketan Mittal, and Vladimir Tomov.

The D in CEED

Bringing the world’s fastest computers online—and using their predictive and computational power to further the DOE’s missions—requires expertise and research in multiple disciplines, including the mathematical underpinnings of complex multiphysics applications. For example, the codes that simulate physical phenomena relevant to stockpile stewardship may work just fine on petascale computing systems like LLNL’s Sierra, but they also need to work on future generations of architectures. Retooling numerical algorithms in these large-scale codes is a crucial factor in ensuring exascale systems will be used to their full potential. CEED is the hub for the ECP’s activity in this area, bridging exascale-ready software technologies with hardware requirements.

The mathematics at the heart of this challenge is well known. Scientific and engineering problems abound with partial differential equations (PDEs), which are solved by numerical methods such as the high-order finite element method (FEM). These methods discretize a problem’s domain into smaller, simpler parts called elements; using high-order polynomials on each element can improve an application’s accuracy while reducing its computational time. CEED’s discretization strategies have led to the development of a robust open-source software portfolio (visit CEED on GitHub for individual repositories).
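To make the accuracy claim concrete, here is a small Python sketch, assuming a smooth target function (sin πx on [0, 1]) and equispaced nodes on each element. It interpolates rather than solves a PDE, so it only illustrates approximation power: with the number of degrees of freedom (DOFs) held fixed, raising the polynomial order on each element shrinks the maximum error. All function names are illustrative and are not taken from any CEED library.

```python
import math

def lagrange_eval(nodes, values, x):
    """Evaluate the Lagrange interpolant through (nodes, values) at x."""
    total = 0.0
    for i, (xi, yi) in enumerate(zip(nodes, values)):
        basis = 1.0
        for j, xj in enumerate(nodes):
            if j != i:
                basis *= (x - xj) / (xi - xj)
        total += yi * basis
    return total

def max_interp_error(f, num_elements, order, samples_per_element=50):
    """Sampled max error of piecewise degree-`order` interpolation of f on [0, 1]."""
    h = 1.0 / num_elements
    worst = 0.0
    for e in range(num_elements):
        a = e * h
        # order + 1 equispaced nodes on this element (shared at element ends)
        nodes = [a + k * h / order for k in range(order + 1)]
        values = [f(x) for x in nodes]
        for s in range(samples_per_element + 1):
            x = a + s * h / samples_per_element
            worst = max(worst, abs(f(x) - lagrange_eval(nodes, values, x)))
    return worst

def f(x):
    return math.sin(math.pi * x)

# Hold the DOF count fixed: num_elements * order + 1 = 13 for every order.
dof_budget = 12
errors = {}
for order in (1, 2, 3, 4):
    n = dof_budget // order
    errors[order] = max_interp_error(f, n, order)
    print(f"order {order}: {n:2d} elements, {n * order + 1} DOFs, "
          f"max error {errors[order]:.2e}")
```

For smooth functions the interpolation error of degree-p elements typically falls like h^(p+1) as the element size h shrinks, which is one reason high-order elements can deliver more accuracy per DOF; for non-smooth solutions the advantage is less automatic.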

Although CEED’s focus on improving high-order discretization algorithms is narrow, its impact is anything but. According to Kolev, the center has pushed the boundaries of what’s possible with high-order methods. He states, “We’ve proven the value and benefits of these methods. The software we’ve developed advances research in the high-order ecosystem including meshing, discretization, solvers, and GPU portability.”

These efforts have produced more than a hundred papers in addition to the center’s technical milestone reports, which are compiled into a publicly accessible compendium nicknamed “the ProCEEDings.” Each milestone report demonstrates algorithm development over time, engagement with application teams, and progress with optimization and porting to early access systems (smaller testbeds with the same architectures as exascale supercomputers). Kolev says, “Making our reports available to the scientific community has led to expansion of the work. We’re helping researchers at other organizations learn from our progress.”

Do the Math

CEED’s software portfolio includes FEM for fluid dynamics problems (Nek5000), scalable FEM discretization (MFEM), linear algebra libraries (MAGMA), and differential and algebraic equation solvers (PETSc), among other solutions. These projects implement various discretization techniques across a wide range of hardware configurations and scientific domains.

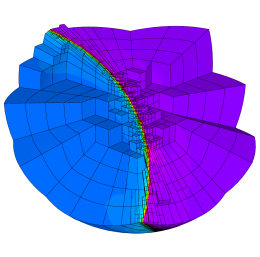

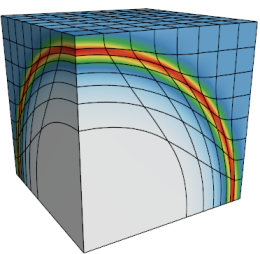

In one research focus, the CEED team has optimized computations on the different types of meshes necessary for PDE-based simulations, as well as on high- and low-order spaces and geometries. For instance, computations on meshes whose elements are mapped from familiar 3D shapes (e.g., hexahedra, wedges, tetrahedra, pyramids) can be cached and partially assembled to use GPU computational resources efficiently.
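A rough sketch of the partial-assembly idea follows, reduced to one dimension and linear elements so it fits in a few lines. The setup step caches, for each element, the quadrature weight times the Jacobian at every quadrature point; the apply step then computes the operator action element by element as Bᵀ D B, where B interpolates element values to quadrature points and D holds the cached data. No global matrix is formed. The mass operator, the function names, and the 1D setting are assumptions for illustration; libraries such as MFEM do this for high-order 2D and 3D elements, and this sketch does not reproduce their APIs.

```python
import math

# Reference element [-1, 1]: 2-point Gauss quadrature and linear basis.
QPTS = [-1.0 / math.sqrt(3.0), 1.0 / math.sqrt(3.0)]
QWTS = [1.0, 1.0]
# B[q][i] = value of basis function i at quadrature point q
B = [[(1.0 - xi) / 2.0, (1.0 + xi) / 2.0] for xi in QPTS]

def setup_partial_assembly(vertices):
    """Cache D[e][q] = weight * |Jacobian| at each quadrature point (hypothetical)."""
    data = []
    for e in range(len(vertices) - 1):
        jac = (vertices[e + 1] - vertices[e]) / 2.0  # maps [-1, 1] onto element e
        data.append([w * abs(jac) for w in QWTS])
    return data

def apply_mass_pa(data, u):
    """y = M u computed element by element as B^T D B, never forming M."""
    y = [0.0] * len(u)
    for e, d in enumerate(data):
        ue = [u[e], u[e + 1]]                                   # gather element DOFs
        uq = [sum(B[q][i] * ue[i] for i in range(2)) for q in range(2)]  # apply B
        dq = [d[q] * uq[q] for q in range(2)]                   # apply cached D
        for i in range(2):                                      # apply B^T, scatter-add
            y[e + i] += sum(B[q][i] * dq[q] for q in range(2))
    return y

def assemble_mass(vertices):
    """Reference only: the fully assembled (dense) global mass matrix."""
    n = len(vertices)
    M = [[0.0] * n for _ in range(n)]
    for e in range(n - 1):
        h = vertices[e + 1] - vertices[e]
        for i in range(2):
            for j in range(2):
                M[e + i][e + j] += h / 6.0 * (2.0 if i == j else 1.0)
    return M

verts = [0.0, 0.1, 0.35, 0.5, 0.9, 1.0]   # a nonuniform 1D mesh
u = [math.cos(3.0 * x) for x in verts]
data = setup_partial_assembly(verts)       # done once, reused on every apply
y_pa = apply_mass_pa(data, u)
M = assemble_mass(verts)
y_full = [sum(M[i][j] * u[j] for j in range(len(u))) for i in range(len(u))]
print("max difference:", max(abs(a - b) for a, b in zip(y_pa, y_full)))
```

In this 1D linear case D is constant on each element, so caching it looks trivial; on curved or high-order 3D elements D varies at every quadrature point, and storing only that data is far cheaper than storing an assembled matrix.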

Another important approach is adaptive mesh refinement (AMR), a process that dynamically refines or coarsens the mesh while the application runs, adjusting the solution’s accuracy where it matters most. AMR is particularly useful on unstructured meshes, which can accommodate almost any nonuniform 2D or 3D shape, such as the region around an aircraft’s wing in an airflow simulation. CEED’s AMR solution is decoupled from an application’s physics, which makes it flexible enough for use in multiple scientific domains.
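The following 1D sketch shows the basic refine-where-needed loop and one way to keep it independent of the physics: the refinement routine accepts any per-element error indicator as a function argument. The tanh profile, the midpoint-error indicator, the tolerance, and all names are hypothetical choices for illustration, not CEED’s actual AMR implementation, which works on 2D and 3D unstructured meshes and also supports coarsening.

```python
import math

def refine(vertices, indicator, tol, max_passes=20):
    """Bisect every element whose error indicator exceeds tol; repeat.

    `indicator(a, b)` is supplied by the caller, so this loop knows
    nothing about the physics being simulated.
    """
    for _ in range(max_passes):
        new_vertices = [vertices[0]]
        refined = False
        for a, b in zip(vertices, vertices[1:]):
            if indicator(a, b) > tol:
                new_vertices.append(0.5 * (a + b))  # split the element in two
                refined = True
            new_vertices.append(b)
        vertices = new_vertices
        if not refined:
            break
    return vertices

def solution(x):
    # Hypothetical profile with a sharp front at x = 0.5
    return math.tanh(50.0 * (x - 0.5))

def midpoint_error(a, b):
    # Gap between the function and its linear interpolant at the element midpoint
    mid = 0.5 * (a + b)
    return abs(solution(mid) - 0.5 * (solution(a) + solution(b)))

mesh = refine([i / 8 for i in range(9)], midpoint_error, tol=1e-3)
sizes = [b - a for a, b in zip(mesh, mesh[1:])]
print(len(sizes), "elements; smallest", min(sizes), "largest", max(sizes))
```

Small elements cluster around the front at x = 0.5, while elements far from it keep their original size, so resolution is spent only where the indicator asks for it.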

CEED research also encompasses advanced linear algebra. Each element of a mesh has a tensor describing the relationship between objects and the space around them, such as a material’s stress at a given point. Reducing the order of the tensor—contracting it—helps shrink the number of necessary calculations in an application, but this process consumes computational time and memory. CEED’s linear algebra algorithms run batches of tensor contractions (and other routines) in parallel, optimizing their throughput on GPU-based architectures.
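One common place such contractions appear in high-order FEM is sum factorization on tensor-product elements (quadrilaterals and hexahedra). The sketch below assumes a 2D element with n nodes and q quadrature points per direction and a 1D interpolation matrix B of size q × n, all hypothetical. It computes the same quadrature-point values two ways: as one large contraction, and as two small 1D contractions applied in sequence. The `batched` helper simply loops over elements; on a GPU each element’s contraction could run in parallel, which is the kind of batching the paragraph describes, but this code does not model any GPU library.

```python
import random

def interpolate_full(B, U):
    """Naive: V[a][b] = sum_{i,j} B[a][i] * B[b][j] * U[i][j]  (q^2 * n^2 work)."""
    q, n = len(B), len(B[0])
    return [[sum(B[a][i] * B[b][j] * U[i][j] for i in range(n) for j in range(n))
             for b in range(q)] for a in range(q)]

def interpolate_sum_factorized(B, U):
    """Two 1D contractions (q * n^2 + q^2 * n work), same result."""
    q, n = len(B), len(B[0])
    # T[i][b] = sum_j B[b][j] * U[i][j]   (contract the second index)
    T = [[sum(B[b][j] * U[i][j] for j in range(n)) for b in range(q)]
         for i in range(n)]
    # V[a][b] = sum_i B[a][i] * T[i][b]   (contract the first index)
    return [[sum(B[a][i] * T[i][b] for i in range(n)) for b in range(q)]
            for a in range(q)]

def batched(kernel, B, elements):
    """Apply the same small contraction to every element in a batch."""
    return [kernel(B, U) for U in elements]

random.seed(0)
n, q = 4, 5  # nodes and quadrature points per direction
B = [[random.uniform(-1, 1) for _ in range(n)] for _ in range(q)]
elements = [[[random.uniform(-1, 1) for _ in range(n)] for _ in range(n)]
            for _ in range(3)]
full = batched(interpolate_full, B, elements)
fast = batched(interpolate_sum_factorized, B, elements)
diff = max(abs(x - y) for Vf, Vs in zip(full, fast)
           for rf, rs in zip(Vf, Vs) for x, y in zip(rf, rs))
print("max difference:", diff)
print("multiply-adds per element:", q * q * n * n, "vs", q * n * n + q * q * n)
```

For n = 4 and q = 5 this is 400 versus 180 multiply-adds per element, and the gap widens as the polynomial order and the spatial dimension grow.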

Another focus is on matrix-free solvers, which reduce memory usage by computing the action of discretized operators on the fly instead of storing large assembled matrices. After analyzing different types of matrices, CEED researchers found that matrix-free methods provide cost-effective solutions for high-fidelity physics problems including radiation transport and inertial confinement fusion.
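A minimal illustration of the matrix-free principle: the conjugate gradient (CG) method needs only the action x ↦ A x, never the entries of A. In this sketch the operator is a hypothetical 1D Laplacian stencil applied on the fly, so the solver stores just a handful of vectors of length n rather than a matrix. This is a textbook CG, not code from any CEED package.

```python
import math

def apply_laplacian(x):
    """y = A x for the 1D stencil [-1, 2, -1] with zero boundary values.

    The matrix A is never stored; only this action is available."""
    n = len(x)
    y = [0.0] * n
    for i in range(n):
        left = x[i - 1] if i > 0 else 0.0
        right = x[i + 1] if i < n - 1 else 0.0
        y[i] = 2.0 * x[i] - left - right
    return y

def dot(a, b):
    return sum(ai * bi for ai, bi in zip(a, b))

def conjugate_gradient(apply_A, b, tol=1e-10, max_iter=1000):
    """Solve A x = b for symmetric positive definite A given only x -> A x."""
    x = [0.0] * len(b)
    r = list(b)          # residual b - A x for the initial guess x = 0
    p = list(r)
    rr = dot(r, r)
    for _ in range(max_iter):
        if math.sqrt(rr) < tol:
            break
        Ap = apply_A(p)
        alpha = rr / dot(p, Ap)
        x = [xk + alpha * pk for xk, pk in zip(x, p)]
        r = [rk - alpha * apk for rk, apk in zip(r, Ap)]
        rr_new = dot(r, r)
        p = [rk + (rr_new / rr) * pk for rk, pk in zip(r, p)]
        rr = rr_new
    return x

b = [1.0] * 50
x = conjugate_gradient(apply_laplacian, b)
residual = [bi - yi for bi, yi in zip(b, apply_laplacian(x))]
print("max residual:", max(abs(v) for v in residual))
```

In high-order FEM the `apply_A` role is played by an element-by-element operator evaluation (for example, a partially assembled operator), which is where the memory savings become large.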

Recipes for Success

Ultimately, when a simulation’s underlying mathematical solutions run more efficiently, the entire application’s performance improves. Tim Warburton, director of Virginia Tech’s Parallel Numerical Algorithms research group and the John K. Costain Faculty Science Chair, points out, “A Venn diagram of CEED participants would show that everyone is concerned with developing highly performant software for exascale systems. Our team is world class in building simulation tools that can tackle stupendously difficult calculations and run efficiently at leading computing facilities.”

True to the co-design spirit, CEED contributors work closely with scientists in application areas of interest to the DOE, such as climate modeling, fluid flow, combustion processes, and other multiphysics problems. Accordingly, the center’s software development includes “CEEDlings”—open-source mini-applications serving as proxies for large-scale physics and numerical kernels. For example, ECP code teams and vendors use the mini-app Laghos to model the Lagrangian phase of compressible shock hydrodynamics.

CEEDlings also help improve aspects of high-order computation such as data storage and memory movement, and they have become important tools in exascale system procurement. “Mini-apps are easy for vendors to pick up and test implementations. Project teams can easily experiment with a mini-app as a proof of concept,” says Kolev.

To encourage adoption of the CEED software ecosystem, the team has developed benchmark specifications, nicknamed “bake-off” problems, to test and compare the performance of discretization methods. Kolev explains, “The bake-offs establish a baseline where we all agree on what needs to be measured and how we define success. This allows us to compare different methods, code bases, machines, processors, and networks.”
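Agreeing on “what needs to be measured” usually means fixing a figure of merit. The sketch below assumes a throughput metric of the form (DOFs × iterations) / (compute nodes × seconds), the kind of DOFs-per-second measure in which the CEED bake-off results are commonly reported, and times a hypothetical smoothing stencil with it. The harness, the operator, and all names are illustrative; the actual bake-off problems specify particular FEM operators, orders, and solver settings that this does not reproduce.

```python
import time

def throughput(dofs, iterations, nodes, seconds):
    """Figure of merit (assumed): (DOFs x iterations) / (compute nodes x seconds)."""
    return dofs * iterations / (nodes * seconds)

def run_benchmark(apply_operator, x, iterations, nodes=1):
    """Time repeated operator applications and return DOFs per second per node."""
    start = time.perf_counter()
    for _ in range(iterations):
        apply_operator(x)  # same input each time, so values stay bounded
    elapsed = time.perf_counter() - start
    return throughput(len(x), iterations, nodes, elapsed)

def smooth(x):
    """Hypothetical operator: a 3-point averaging stencil with zero boundaries."""
    n = len(x)
    return [0.5 * x[i]
            + 0.25 * (x[i - 1] if i > 0 else 0.0)
            + 0.25 * (x[i + 1] if i < n - 1 else 0.0)
            for i in range(n)]

rate = run_benchmark(smooth, [1.0] * 10_000, iterations=20)
print(f"{rate / 1e6:.2f} MDOF/s per node")
```

Normalizing by DOFs and by node count is what lets the same number be compared across methods, polynomial orders, and machines, as Kolev describes.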

The suite of benchmarks has been tested on several GPU architectures over the years, growing to address compiler speed, node performance, and other measurable parameters. Warburton adds, “The bake-off process was designed as a competition, with all the participating institutions sharing ideas and code so that the final software tools were all leveled up with new ideas, methods, and implementations.”

Together, CEEDlings and bake-off problems capture and standardize the unique requirements of high-order discretization algorithms. During the center’s tenure, refinements to these proxy apps and benchmarks have helped code teams and vendors develop better performing applications across diverse computing systems. “Porting software and applications to new hardware is difficult,” Kolev points out. “Again and again we’ve seen one team develop a new idea, another team improve it, and together we raise all the boats.”

'A Win for Everyone'

Performance portability of high-order FEM is one of CEED’s major success stories. “Application developers can rest assured they will achieve performance across all key architectures, and this performance is generally better per degree of freedom than with low-order methods,” states Jed Brown, a computer science professor from the University of Colorado at Boulder. “CEED showed that many messy, non-smooth, and highly nonlinear problems can be solved using this methodology, generally at higher efficiency than with standard methods.”

The CEED collaboration produced new software tools with tangible results at scale. For instance, NekRS combines CEED’s Nek5000 and libParanumal software libraries to provide solvers for computational fluid dynamics problems. Warburton says, “I have been truly impressed by the performance of NekRS on the many thousands of GPUs of Oak Ridge’s Summit and Frontier systems. NekRS has efficiently computed high-fidelity physics simulations of the coupled thermo-fluid and neutronics of small modular nuclear and pebble bed reactors on the whole of these machines.”

Not all of the center’s accomplishments are found within the 600 pages of milestone reports. Many students and postdoctoral researchers have launched their careers through their CEED experience. For example, Brown’s students are working on or using CEED-based solvers in elasticity, plasticity, and other material models. At Virginia Tech, four postdocs and a graduate student contributed to CEED’s mathematical analysis, computational algorithms, software development, optimization, and physical modeling. Additionally, Warburton’s students visited national labs, gained experience with NekRS and libParanumal, and helped port codes to AMD (Advanced Micro Devices) GPUs.

With the ECP drawing to a close, what happens next for CEED’s software projects is an open question. “Community building has been one of the ECP’s great successes, and our biggest accomplishment is the team we created,” Kolev states. “Bringing this group together is a win for everyone. I hope we can continue collaborations in the future.”

—Holly Auten