Prominently situated in the massive exhibit hall at the International Conference for High Performance Computing, Networking, Storage, and Analysis (SC25), the Department of Energy’s (DOE) booth summarized all 17 national laboratories’ high performance computing (HPC) achievements and advancements. During the weeklong conference, the booth’s organizers managed a roster of speakers and technical demonstrations on a range of topics in HPC, quantum computing, and artificial intelligence (AI). LLNL staff claimed three spots on the booth’s SC25 schedule, giving conference-goers a chance to learn and ask questions in real time.

Flexible Containers

The award-winning Flux resource management software suite helps application teams run complex workflows on any HPC system. Its flexibility in scheduling hierarchical jobs means users can tailor their workflows to systems of different scales. Crucially, Flux supports container-based workflows, which combine a code and its dependencies into a package that can run in different environments—an increasingly popular setup for hybrid computing configurations.

At the DOE booth, Flux developer William Hobbs demonstrated how to launch Flux in a Docker container. “A container gives the resource management and scheduling capabilities a smaller footprint for developing locally,” he explained. Because Flux does not require elevated privileges to run, this setup works easily on a laptop for both the -core and -sched Flux repositories. Conveniently, all standard Flux commands work inside the container. (Elsewhere at the conference, team members hosted a detailed tutorial on Flux integration with Kubernetes.)

Hobbs also showed how to leverage another LLNL software library, mpibind, alongside Flux. mpibind maps scientific applications to individual HPC hardware resources by assigning an application’s abstractions to specific processor cores, thus improving the code’s portability and performance. Hobbs noted, “mpibind takes into account memory placement, something Flux’s native tools wouldn’t necessarily have insight into, and tries to limit remote memory accesses.” Combining these complementary projects provides users with even more control over how their application workflow runs while improving performance.

Protein Structures at Exascale

AI technologies have transformed mission-driven research at LLNL, especially in combination with exascale computing. For example, machine learning (ML) models can predict how protein structures assemble and behave, which in turn helps scientists develop countermeasures for emerging biological threats.

In a collaboration with Advanced Micro Devices and Columbia University, LLNL’s ElMerFold project used the power of El Capitan and an extreme-scale ML framework to produce 3D structure predictions for more than 41 million proteins. As computer scientist Nikoli Dryden explained in a featured talk at the DOE booth, the team’s record-breaking AI inference workflow helped them generate distillation datasets of high-quality synthetic data, which are then used to train the ML model. “Experimental datasets alone are insufficient, and retraining models quickly is important for addressing future threats,” he noted.

The workflow was optimized for El Capitan by using the system’s unified processor memory as well as offloading input/output operations to the node-local Rabbits hardware before saving the data to the long-term Lustre file system. Dryden emphasized, “This approach is critical for scalability.” Incorporating Flux’s scheduling capabilities alongside ML model acceleration via the LBANN software, the ElMerFold workflow produced 2,400 protein structure predictions per second, scaling up to tens of millions in just a few hours. The project team plans to publicly release their 1.6-petabyte distillation dataset soon.
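As a rough sanity check on those figures, the short calculation below estimates how long 41 million predictions would take at the quoted rate. It is only a back-of-the-envelope sketch: it assumes the 2,400-per-second rate is sustained for the entire run, which the team did not state, and the function name is illustrative rather than part of any ElMerFold code.

```python
# Back-of-the-envelope check using only figures quoted in the article.
# Assumption: the quoted rate holds constant for the whole run.
PREDICTIONS_PER_SECOND = 2_400   # reported throughput of the workflow
TOTAL_PROTEINS = 41_000_000      # "more than 41 million", used as a lower bound


def hours_at_sustained_rate(total: int, rate_per_second: float) -> float:
    """Wall-clock hours needed to process `total` items at a constant rate."""
    return total / rate_per_second / 3600


hours = hours_at_sustained_rate(TOTAL_PROTEINS, PREDICTIONS_PER_SECOND)
print(f"{hours:.1f} hours")
```

Under that assumption the run takes just under five hours, which is consistent with the "few hours" described above.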

Agentic Simulation Workflow

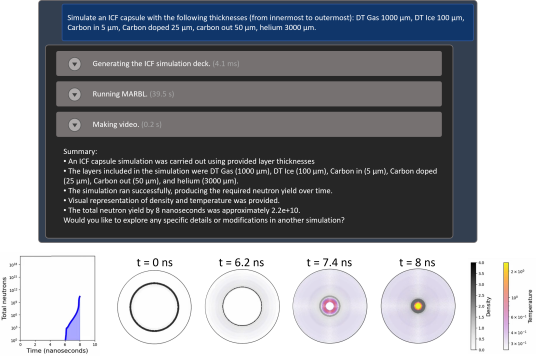

AI technologies have also gained traction in inertial confinement fusion (ICF) designs at LLNL’s National Ignition Facility (NIF). As Charles Jekel, a staff scientist in LLNL’s Computational Engineering Division, demonstrated, AI agents can help physicists investigate a vast design space and quickly narrow down viable designs for NIF experiments. “Our staff have fantastic ideas to improve yield at NIF and to meet our changing national security demands, and AI agents are a force multiplier on these ideas, helping to thoroughly explore and find better designs,” Jekel pointed out.

Jekel showed how to interact with a special user interface that resembles a chat session with a large language model, except that the user prompts it to run simulations with specific parameters. The model provides a visual or animated output along with suggestions for improvement, which the user can decide to implement in subsequent queries. Jekel’s demonstration included real-time results streamed from Livermore’s 10-petaflop Dane supercomputer to his laptop at the conference.

This iterative process is part of the LLNL-led Multi-Agent Design Assistant (MADA) project, which uses AI agents to create a design’s geometry, generate a simulation, summarize the results, and recommend additional solutions. For example, the user could change the thickness of an ICF capsule layer or an ingredient inside it, then ask the model how to increase the capsule’s total energy yield. Jekel noted, “The AI models have their own naïve understanding of complex physics like fusion energy. However, that understanding greatly expands when given the ability to execute our validated multiphysics simulations, which enable the AI systems to hypothesize and reason about why some designs perform better than others.”

Developing new ICF designs is intractable without HPC power or advances like MADA. “Understanding and exploring designs require simulation codes running on HPC resources, and interaction with AI is key because of the suggestions and explanations it can provide,” explained Jekel. “These solutions won’t replace people but instead augment them, giving them better, time-saving tools for accomplishing our mission.”

Additional SC25 coverage:

- LLNL caps SC25 with HPC leadership, major science advances and artificial intelligence

- El Capitan retains title as world’s fastest supercomputer in latest Top500

- LLNL, UT & UCSD win Gordon Bell Prize with exascale tsunami forecasting

- LLNL honored at SC25 with HPCwire and Hyperion Research awards for computing excellence

— Holly Auten