High-performance computing (HPC) systems use checkpoint and restart to tolerate failures. Typically, applications store their state in checkpoints on a parallel file system (PFS). As applications scale up, checkpoint-restart incurs high overheads due to contention for overloaded PFS resources. These overheads force large-scale applications to checkpoint less often, which means more compute time is lost when a failure occurs.

To alleviate these problems, we developed mcrEngine, a scalable checkpoint-restart system. mcrEngine aggregates checkpoints from multiple application processes, using knowledge of the data semantics available through widely used I/O libraries such as HDF5 and netCDF, and then compresses them. Our novel scheme improves the compressibility of checkpoints by up to 115% over simple concatenation and compression. Our evaluation with large-scale application checkpoints shows that mcrEngine reduces checkpointing overhead by up to 87% and recovery overhead by up to 62% over a baseline with no aggregation or compression.

Inter-process vs Intra-process Similarity

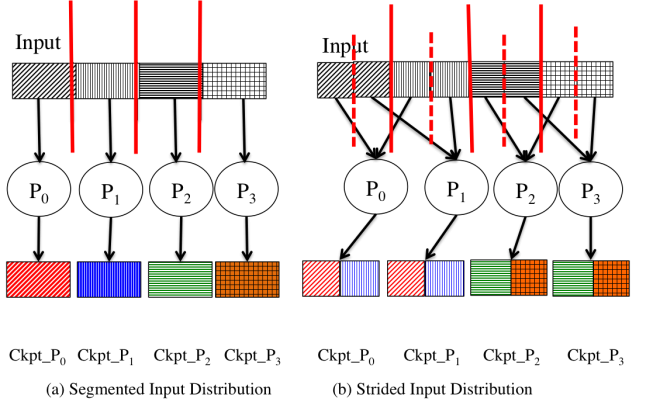

The figure above gives a high-level view of how the input distribution can introduce similarity in either intra- or inter-process checkpoints. Checkpoints generated in (a) have more similarity among variables within a single checkpoint than across the checkpoints of different processes. The opposite is true for checkpoints generated by the processes in (b).

"Data-Aware" Compression

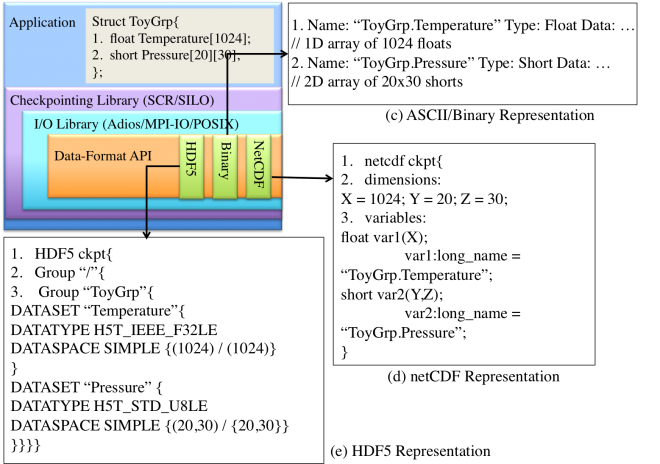

Essentially, we investigate this question: "If we knew more about the meaning of the data actually written in these checkpoints, could we compress them more effectively?" Using the meaning of data to better compress checkpoints across processes is what we call "data-aware" compression. We extract the meaning of data from checkpoints by looking at the per-variable annotations provided by high-level data description libraries such as HDF5 and netCDF. When application developers use these libraries to write checkpoints in portable formats, they give us clues about how to interpret the data written in those checkpoints. This makes our technique transparent to application developers who already use these libraries to write their checkpoints. The rationale is that placing all variables of the same kind, for example all variables storing temperature data, close together may improve the compressibility of checkpoint files that are otherwise known to be hard to compress. Figures (c), (d), and (e) illustrate different ways users can make semantic information available. A number of large-scale applications already use hierarchical data formats such as HDF5 and netCDF to annotate their output files for better portability across architectures. These applications can apply our technique to their data at no extra programming cost.
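
The rationale above can be illustrated with a small, self-contained sketch. It is not mcrEngine's algorithm: the checkpoints are synthetic dictionaries rather than HDF5 or netCDF files, the variable names (`var0`, `var1`, ...) and helper functions are hypothetical, and `zlib` stands in for whatever compressor is actually used. Under those assumptions, it compares concatenating checkpoints process by process against grouping same-named variables from all processes next to each other before compressing.

```python
import random
import zlib


def make_checkpoints(n_procs=4, n_vars=8, var_bytes=8192, seed=0):
    """Build synthetic per-process checkpoints: {variable name: raw bytes}.

    Each variable is nearly identical across processes (one byte differs),
    which mimics strong inter-process similarity. This is an assumption
    made for illustration, not a property of real checkpoints.
    """
    rng = random.Random(seed)
    base = {
        f"var{v}": bytes(rng.getrandbits(8) for _ in range(var_bytes))
        for v in range(n_vars)
    }
    checkpoints = []
    for p in range(n_procs):
        ckpt = {}
        for name, data in base.items():
            buf = bytearray(data)
            buf[p] ^= 0xFF  # small per-process difference
            ckpt[name] = bytes(buf)
        checkpoints.append(ckpt)
    return checkpoints


def concat_by_process(checkpoints):
    """Simple concatenation: one whole checkpoint after another."""
    return b"".join(ckpt[name] for ckpt in checkpoints for name in sorted(ckpt))


def concat_by_variable(checkpoints):
    """Data-aware ordering: same-named variables from all processes adjacent."""
    names = sorted(checkpoints[0])
    return b"".join(ckpt[name] for name in names for ckpt in checkpoints)


checkpoints = make_checkpoints()
naive = zlib.compress(concat_by_process(checkpoints))
grouped = zlib.compress(concat_by_variable(checkpoints))
print("process order :", len(naive), "bytes")
print("variable order:", len(grouped), "bytes")
```

In this synthetic setup, each process checkpoint is larger than the 32 KiB window that `zlib` searches for repeated data, so similar variables in process order are too far apart to be matched, while variable order places them within reach. Real checkpoints will behave differently depending on their contents and on the compressor.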

Similarity-aware Checkpoint Aggregation + Data-aware Compression = mcrEngine

In addition to developing a new compression technique, we designed and implemented an end-to-end system called mcrEngine. During the checkpointing phase, our system (1) reads checkpoints from disk after an application generates them, (2) aggregates checkpoints from multiple processes of the same application on an aggregator node and applies data-aware compression, and (3) writes the resulting large, merged and compressed data to the PFS. After a failure, mcrEngine performs these steps in reverse to regenerate the required checkpoints from the PFS so that the application can restart.
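
The aggregate, compress, and restore round trip described above can be sketched as follows. This is a minimal illustration under stated assumptions, not mcrEngine's implementation: the container layout (a length-prefixed JSON index followed by the variable data), the function names `merge_and_compress` and `restore`, and the use of `zlib` are all hypothetical, and reading from local disk and writing to the PFS are left out.

```python
import json
import struct
import zlib


def merge_and_compress(checkpoints):
    """Merge {rank: {variable name: bytes}} into one compressed blob.

    Same-named variables from all ranks are stored next to each other,
    and an index records which rank and variable each chunk belongs to.
    """
    names = sorted({name for ckpt in checkpoints.values() for name in ckpt})
    index, chunks = [], []
    for name in names:
        for rank in sorted(checkpoints):
            if name in checkpoints[rank]:
                data = checkpoints[rank][name]
                index.append([rank, name, len(data)])
                chunks.append(data)
    header = json.dumps(index).encode("utf-8")
    # Hypothetical layout: 8-byte header length, JSON index, then raw data.
    payload = struct.pack("<Q", len(header)) + header + b"".join(chunks)
    return zlib.compress(payload)


def restore(blob):
    """Reverse merge_and_compress: rebuild every rank's checkpoint."""
    payload = zlib.decompress(blob)
    (header_len,) = struct.unpack_from("<Q", payload, 0)
    index = json.loads(payload[8:8 + header_len].decode("utf-8"))
    offset = 8 + header_len
    checkpoints = {}
    for rank, name, size in index:
        checkpoints.setdefault(rank, {})[name] = payload[offset:offset + size]
        offset += size
    return checkpoints


# Two ranks with illustrative variables; the values are placeholders.
original = {
    0: {"temperature": b"\x00" * 64, "pressure": b"\x01" * 64},
    1: {"temperature": b"\x00" * 64, "pressure": b"\x02" * 64},
}
blob = merge_and_compress(original)
assert restore(blob) == original
```

Keeping the index inside the merged blob means that restart needs only that one object to regenerate each process's checkpoint, which matches the single large write in step (3).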

Publications

Tanzima Zerin Islam, Kathryn Mohror, Saurabh Bagchi, Adam Moody, Bronis R. de Supinski, and Rudolf Eigenmann, "mcrEngine: A Scalable Checkpointing System using Data-aware Aggregation and Compression," International Conference for High Performance Computing, Networking, Storage and Analysis (SC), 2012, LLNL-CONF-554251.