High-performance computing systems are growing more powerful by using more components. As component counts rise, the system mean time between failures drops, so applications must checkpoint frequently to make progress. However, at scale, the cost in time and bandwidth of checkpointing to a parallel file system becomes prohibitive. A solution to this problem is multilevel checkpointing.

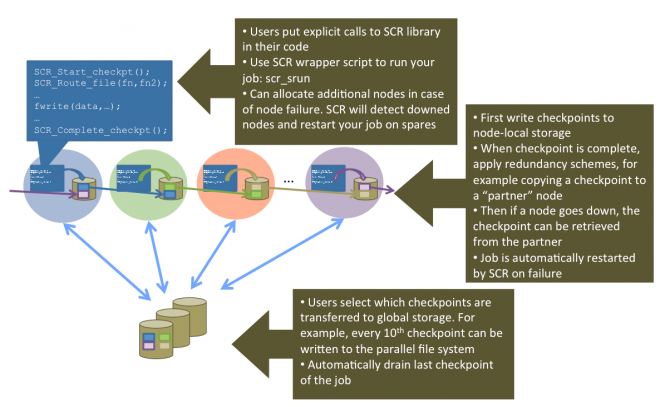

Multilevel checkpointing allows applications to take both frequent inexpensive checkpoints and less frequent, more resilient checkpoints, resulting in better efficiency and reduced load on the parallel file system. The slowest but most resilient level writes to the parallel file system, which can withstand an entire system failure.
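The idea of mixing checkpoint levels can be sketched as a simple schedule. The following Python example is a minimal illustration, not SCR's actual policy: the names `Level`, `choose_level`, and `schedule`, and the fixed `pfs_every` interval, are all assumptions made for this sketch. It sends most checkpoints to the fast, less resilient level and every Nth checkpoint to the parallel file system.

```python
from enum import Enum


class Level(Enum):
    """Hypothetical checkpoint levels, ordered from fastest to most resilient."""
    NODE_LOCAL = 1    # fast, survives only the common failure modes
    PARALLEL_FS = 2   # slow, survives an entire system failure


def choose_level(checkpoint_index: int, pfs_every: int) -> Level:
    """Pick a level for the checkpoint with the given 1-based index.

    Assumption for this sketch: every `pfs_every`-th checkpoint goes to the
    parallel file system, and all others stay on node-local storage.
    """
    if checkpoint_index < 1 or pfs_every < 1:
        raise ValueError("checkpoint_index and pfs_every must be >= 1")
    if checkpoint_index % pfs_every == 0:
        return Level.PARALLEL_FS
    return Level.NODE_LOCAL


def schedule(num_checkpoints: int, pfs_every: int) -> list[Level]:
    """Return the level chosen for checkpoints 1..num_checkpoints."""
    return [choose_level(i, pfs_every) for i in range(1, num_checkpoints + 1)]


if __name__ == "__main__":
    for index, level in enumerate(schedule(6, 3), start=1):
        print(index, level.name)
```

With `pfs_every=3`, only one checkpoint in three touches the parallel file system, which is where the reduced load comes from. A real policy would likely derive the interval from measured failure rates and checkpoint costs rather than fix it by hand.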

Faster checkpointing for the most common failure modes uses node-local storage, such as RAM, flash, or disk, and applies cross-node redundancy schemes. Most failures disable only one or two nodes, and multinode failures often disable nodes in a predictable pattern. Thus, given well-chosen redundancy schemes, an application can usually recover from a less resilient checkpoint level.
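One way to picture a cross-node redundancy scheme is XOR parity across a small group of nodes. The Python sketch below is an assumption chosen for illustration; the text above does not specify which schemes are used, and the function names (`xor_blocks`, `make_parity`, `recover_lost`) are hypothetical. Each node's checkpoint is modeled as an equal-length byte string, and the parity block is assumed to be stored on a node outside the group so that it survives the failure.

```python
from functools import reduce


def xor_blocks(blocks: list[bytes]) -> bytes:
    """Byte-wise XOR of equal-length blocks."""
    if not blocks:
        raise ValueError("at least one block is required")
    length = len(blocks[0])
    if any(len(block) != length for block in blocks):
        raise ValueError("all blocks must have the same length")
    # zip(*blocks) yields one tuple of byte values per byte position.
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


def make_parity(checkpoints: list[bytes]) -> bytes:
    """Compute a parity block over the checkpoints of one node group."""
    return xor_blocks(checkpoints)


def recover_lost(surviving: list[bytes], parity: bytes) -> bytes:
    """Rebuild the single missing checkpoint from the survivors and the parity."""
    return xor_blocks(surviving + [parity])


if __name__ == "__main__":
    group = [b"node0-ckpt", b"node1-ckpt", b"node2-ckpt"]
    parity = make_parity(group)
    # Simulate losing node 1: its checkpoint is rebuilt without the parallel file system.
    rebuilt = recover_lost([group[0], group[2]], parity)
    assert rebuilt == group[1]
```

A single parity block tolerates only one lost checkpoint per group, which matches the observation that most failures disable one or two nodes. Tolerating correlated multinode failures would require grouping nodes so that nodes likely to fail together land in different groups.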

To evaluate this approach in a large-scale, production system context, LLNL researchers developed the Scalable Checkpoint/Restart (SCR) library. SCR has been in production use since late 2007, with RAM disks and solid-state drives on Linux clusters. Overall, with SCR, we have found that jobs run more efficiently, recover more work upon failure, and reduce load on critical shared resources such as the parallel file system and the network infrastructure.

The SCR framework has been successful at reducing overhead on today’s systems, but we need to develop new methods as we move toward extreme-scale computing. In general, our research efforts focus on reducing the overhead of writing checkpoints even further. We are exploring strategies such as a fast node-local file system written especially for checkpoint I/O, checkpoint compression, asynchronous checkpoint movement, and managing the use of hierarchical storage on future machines, e.g., using burst buffers as an intermediate step before reaching the parallel file system.