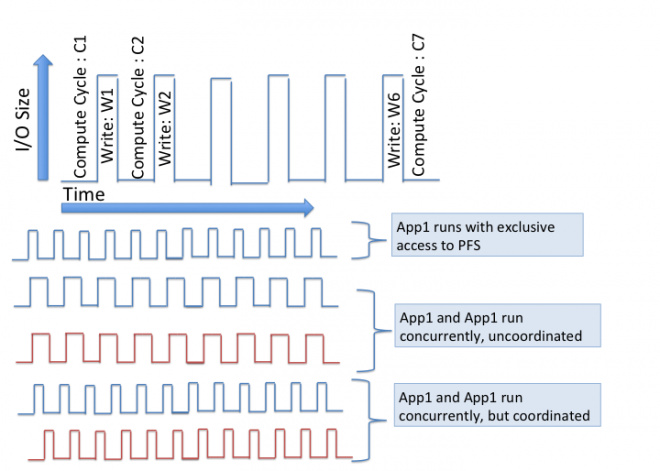

Scientific applications running on HPC systems share common I/O resources, including the parallel file system (PFS), and often suffer from inter-application I/O interference caused by contention for shared storage. We explore mitigating this interference by coordinating I/O activities across applications. For example, applications can be scheduled so that the I/O cycles of one or a few applications overlap with the compute cycles of the others. The applications then do not access the PFS at the same time, which reduces interference.

I/O Coordination

We observe that scientific applications often have predictable I/O patterns for most of their execution, and we can leverage these patterns to coordinate I/O between applications. The example figure illustrates I/O coordination between two applications. By overlapping the compute and I/O cycles of the two applications, we can eliminate the I/O interference experienced by App1. App2 incurs a small overhead from the initial wait needed to shift its phase so that the cycles of App1 and App2 overlap.
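The phase-shift idea can be sketched in a few lines of Python. This sketch rests on our own simplifying assumption, not on the system's actual model: each application strictly alternates a fixed-length compute phase and a fixed-length I/O phase, and both applications have the same period. The names `PeriodicApp`, `io_overlap`, and `min_phase_shift` are hypothetical. The shift that `min_phase_shift` returns plays the role of App2's initial wait.

```python
from dataclasses import dataclass


@dataclass
class PeriodicApp:
    """Hypothetical model: each cycle is a compute phase followed by an I/O phase."""
    compute: float      # seconds of computation per cycle
    io: float           # seconds of PFS I/O per cycle
    start: float = 0.0  # initial wait before the first cycle begins

    def io_windows(self, horizon):
        """Return (begin, end) of every I/O phase that begins before `horizon`."""
        period = self.compute + self.io
        windows, t = [], self.start
        while t + self.compute < horizon:
            windows.append((t + self.compute, t + period))
            t += period
        return windows


def io_overlap(a, b, horizon):
    """Total time during which both applications are in an I/O phase."""
    total = 0.0
    for s1, e1 in a.io_windows(horizon):
        for s2, e2 in b.io_windows(horizon):
            total += max(0.0, min(e1, e2) - max(s1, s2))
    return total


def min_phase_shift(a, b, horizon, step=0.5):
    """Smallest extra wait for `b` (a multiple of `step`, at most one period of `a`)
    that removes all I/O overlap with `a`; None if no such shift exists."""
    k = 0
    while k * step <= a.compute + a.io:
        shifted = PeriodicApp(b.compute, b.io, start=b.start + k * step)
        if io_overlap(a, shifted, horizon) == 0.0:
            return k * step
        k += 1
    return None
```

Consider two applications that each compute for 6 s and then write for 2 s. If they start together, they contend for the PFS for 2 s in every cycle. Delaying App2 by 2 s removes the overlap entirely, and that one-time 2 s wait is App2's overhead. If the periods differ, the phases drift apart over time, and a single initial shift is no longer sufficient.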

I/O Coordination System

This is our system model for coordinated I/O scheduling between applications. It uses a global I/O scheduler and a local I/O coordinator to manage application I/O requests and to co-schedule applications. The local coordinator takes control of an application's I/O requests by intercepting its I/O calls. It then communicates with the global scheduler to obtain scheduling decisions and executes the I/O requests accordingly.
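The following is a minimal, single-process sketch of the two components. It assumes a first-come, first-served policy in which the global scheduler grants exclusive PFS access to one application at a time. The class and method names are hypothetical, and the real I/O routine is passed in as `do_io`. In a real deployment, grants would arrive as messages from the global scheduler. Here, the caller delivers them by hand.

```python
from collections import deque


class GlobalScheduler:
    """Hypothetical global scheduler: exclusive PFS access, FIFO among waiters."""

    def __init__(self):
        self.holder = None       # app currently allowed to do I/O
        self.waiting = deque()   # apps queued for access, in arrival order

    def request(self, app_id):
        """Return True if `app_id` may do I/O now; otherwise queue it."""
        if self.holder is None or self.holder == app_id:
            self.holder = app_id
            return True
        if app_id not in self.waiting:
            self.waiting.append(app_id)
        return False

    def release(self, app_id):
        """Hand access to the next waiting app and return its id (or None)."""
        if self.holder != app_id:
            raise ValueError(f"{app_id} does not hold PFS access")
        self.holder = self.waiting.popleft() if self.waiting else None
        return self.holder


class LocalCoordinator:
    """Hypothetical per-application coordinator that intercepts I/O calls."""

    def __init__(self, app_id, scheduler, do_io):
        self.app_id = app_id
        self.scheduler = scheduler
        self.do_io = do_io   # the real I/O routine being intercepted
        self.pending = []    # requests deferred until access is granted

    def io_call(self, data):
        """Intercepted I/O call: execute now if granted, otherwise defer."""
        self.pending.append(data)
        if self.scheduler.request(self.app_id):
            self.flush()

    def flush(self):
        """Execute deferred requests, but only while this app holds access."""
        if self.scheduler.holder != self.app_id:
            return
        while self.pending:
            self.do_io(self.app_id, self.pending.pop(0))

    def end_io_phase(self):
        """Release the PFS; return the id of the app granted access next."""
        return self.scheduler.release(self.app_id)
```

For example, suppose App1's coordinator is granted access first. App2's checkpoint write is then deferred until App1 calls `end_io_phase()`. At that point, App2's coordinator can `flush()` its pending requests.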

Application I/O Pattern Detection

This is our framework for detecting the execution patterns (compute and I/O cycles) of scientific applications. We collect and mine application I/O traces to understand their I/O patterns. An I/O pattern can be extracted either off-line or on-line, while the application is running. We use the detected execution patterns to coordinate I/O between applications.
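The mining method is not described here, so the following is only an illustrative sketch under our own assumptions. A trace is a list of `(start, end)` timestamps for individual I/O calls. Calls closer together than `merge_gap` seconds belong to the same I/O burst. An application counts as periodic when its I/O and compute durations each have a coefficient of variation of at most `max_cv`. The functions and thresholds are hypothetical.

```python
from statistics import mean, pstdev


def merge_bursts(calls, merge_gap):
    """Merge (start, end) I/O call records into bursts; calls separated by
    less than `merge_gap` seconds are treated as part of the same burst."""
    bursts = []
    for s, e in sorted(calls):
        if bursts and s - bursts[-1][1] < merge_gap:
            bursts[-1][1] = max(bursts[-1][1], e)
        else:
            bursts.append([s, e])
    return [tuple(b) for b in bursts]


def coeff_of_variation(xs):
    """Population standard deviation divided by the mean (0.0 if the mean is 0)."""
    m = mean(xs)
    return pstdev(xs) / m if m > 0 else 0.0


def detect_pattern(calls, merge_gap=1.0, max_cv=0.1):
    """Estimate per-cycle I/O and compute durations from an I/O trace.
    Returns None when the trace holds fewer than three I/O bursts."""
    bursts = merge_bursts(calls, merge_gap)
    if len(bursts) < 3:
        return None
    io = [e - s for s, e in bursts]
    # Compute phase = gap between the end of one burst and the start of the next.
    compute = [bursts[i + 1][0] - bursts[i][1] for i in range(len(bursts) - 1)]
    return {
        "io": mean(io),
        "compute": mean(compute),
        "period": mean(io) + mean(compute),
        "periodic": coeff_of_variation(io) <= max_cv
        and coeff_of_variation(compute) <= max_cv,
    }
```

Running `detect_pattern` over a sliding window of the most recent trace records would give a simple on-line variant of the same idea. Running it over a complete trace corresponds to off-line extraction.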

Publications

Sagar Thapaliya, Adam Moody, Kathryn Mohror, Purushotham Bangalore, “Inter-Application Coordination for Reducing I/O Interference”, International Conference for High Performance Computing, Networking, Storage and Analysis 2013 (SC13). LLNL-POST-641538